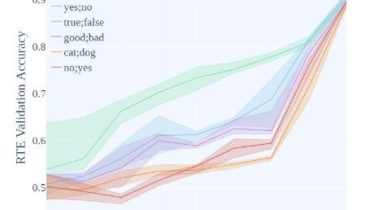

Do Prompt-Based Models Really Understand the Meaning of Their Prompts?

This repository accompanies our paper “Do Prompt-Based Models Really Understand the Meaning of Their Prompts?”

Usage

To replicate our results in Section 4, run:

    python3 prompt_tune.py \
        --save-dir ../runs/prompt_tuned_sec4/ \
        --prompt-path ../data/binary_NLI_prompts.csv \
        --experiment-name sec4 \
        --few-shots 3,5,10,20,30,50,100,250 \
        --production \
        --seeds 1
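The flags above are easier to read once you see how they might be parsed. The following is a minimal, hypothetical argparse sketch. It is not taken from prompt_tune.py, and all types and defaults are assumptions. In particular, it assumes that --few-shots is a comma-separated list of integers (presumably training-set sizes, one run each), that --seeds is also a comma-separated integer list, and that --production and --fully-train are boolean switches.

```python
import argparse


def parse_int_list(value: str) -> list[int]:
    """Turn a comma-separated string such as "3,5,10" into [3, 5, 10]."""
    return [int(part) for part in value.split(",") if part.strip()]


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring the README flags; the real script's
    # types, defaults, and required flags may differ.
    parser = argparse.ArgumentParser(description="Prompt-tuning runs (sketch)")
    parser.add_argument("--save-dir", required=True)
    parser.add_argument("--prompt-path", required=True)
    parser.add_argument("--experiment-name", required=True)
    # Assumed: each value is one few-shot setting to train and evaluate.
    parser.add_argument("--few-shots", type=parse_int_list, default=[])
    # Assumed: boolean switches that take no value.
    parser.add_argument("--production", action="store_true")
    parser.add_argument("--fully-train", action="store_true")
    # Assumed: a comma-separated list of random seeds.
    parser.add_argument("--seeds", type=parse_int_list, default=[1])
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args([
        "--save-dir", "../runs/prompt_tuned_sec4/",
        "--prompt-path", "../data/binary_NLI_prompts.csv",
        "--experiment-name", "sec4",
        "--few-shots", "3,5,10,20,30,50,100,250",
        "--production",
        "--seeds", "1",
    ])
    print(args.few_shots, args.seeds, args.production, args.fully_train)
```

Under these assumptions, the Section 4 command produces eight few-shot settings for a single seed.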

Add --fully-train to train on the entire training set as well as in the few-shot settings.
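If you launch runs from a script, you may want to toggle --fully-train programmatically. The following is a minimal sketch that rebuilds the Section 4 command above as an argv list. It appends --fully-train on request and either prints the command (the default dry run) or executes it with subprocess.run. The helper names build_sec4_command and run are hypothetical and are not part of this repository.

```python
import shlex
import subprocess


def build_sec4_command(fully_train: bool = False) -> list[str]:
    """Return the README's Section 4 command as an argv list (hypothetical helper)."""
    cmd = [
        "python3", "prompt_tune.py",
        "--save-dir", "../runs/prompt_tuned_sec4/",
        "--prompt-path", "../data/binary_NLI_prompts.csv",
        "--experiment-name", "sec4",
        "--few-shots", "3,5,10,20,30,50,100,250",
        "--production",
        "--seeds", "1",
    ]
    if fully_train:
        # Also train on the entire training set, not just the few-shot sizes.
        cmd.append("--fully-train")
    return cmd


def run(cmd: list[str], dry_run: bool = True) -> str:
    """Print the shell-quoted command; execute it only when dry_run is False."""
    line = shlex.join(cmd)
    print(line)
    if not dry_run:
        # check=True raises CalledProcessError if the training script fails.
        subprocess.run(cmd, check=True)
    return line


if __name__ == "__main__":
    run(build_sec4_command(fully_train=True))
```

Passing an argv list instead of a single string avoids shell quoting issues. The dry-run default lets you inspect the command before starting a long training job.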

To replicate our results in Section 5, run:

    python3 prompt_tune.py \
        --save-dir ../runs/prompt_tuned_sec5/ \
        --prompt-path ../data/binary_NLI_prompts_permuted.csv