Category: Video

Video Autoencoder: self-supervised disentanglement of 3D structure and motion

This repository contains the PyTorch code for the model introduced in the following paper: Video Autoencoder: self-supervised disentanglement of 3D structure and motion. Zihang Lai, Sifei Liu, Alexei A. Efros, Xiaolong Wang. ICCV, 2021. [Paper] [Project Page] [12-min oral pres. video] [3-min supplemental video]

Citation: @inproceedings{Lai21a, title={Video Autoencoder: self-supervised disentanglement of 3D structure and motion}, author={Lai, Zihang and Liu, Sifei and Efros, Alexei A and Wang, Xiaolong}, booktitle={ICCV}, year={2021} }

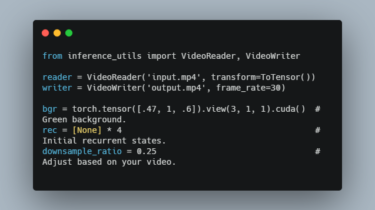

Robust Video Matting in PyTorch and TensorFlow

Official repository for the paper Robust High-Resolution Video Matting with Temporal Guidance. RVM is specifically designed for robust human video matting. Unlike existing neural models that process frames as independent images, RVM uses a recurrent neural network to process videos with temporal memory. RVM can perform matting in real time on any video without additional inputs. It achieves 76 FPS at 4K and 104 FPS at HD on an Nvidia GTX 1080 Ti GPU. The project was developed at ByteDance Inc. News [Aug 25 2021] […]

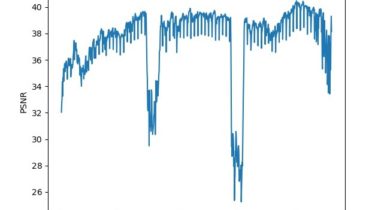

A fantastic work in Video-level Anomaly Detection

This is my code for visualizing the PSNR images of test videos. Future Frame Prediction for Anomaly Detection – A New Baseline is a fantastic work in video-level anomaly detection, published at CVPR 2018. ShanghaiTech svip-lab has released their code on [Github], and there is also an interesting video of the work on [YouTube]. When an anomaly occurs, the PSNR image shows a low response. Here is an example from the Avenue dataset. Testing images through PSNR […]
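To make the PSNR signal concrete, here is a minimal sketch of how a per-frame PSNR between a predicted frame and the actual frame could be computed, and how the values of one video could be rescaled to [0, 1]. It is not taken from the repository: the function names `psnr` and `normalize_scores`, the flat-list frame representation, and the min-max rescaling are assumptions made for illustration.

```python
import math


def psnr(predicted, actual, max_value=1.0):
    """PSNR in dB between two frames given as flat lists of pixel intensities.

    Hypothetical helper: frames are assumed to be flattened and scaled to
    the range [0, max_value].
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("frames must be non-empty and of equal size")
    # Mean squared error between prediction and ground truth.
    mse = sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)


def normalize_scores(psnr_values):
    """Min-max rescale the PSNR values of one video to [0, 1] (assumed scheme)."""
    lo, hi = min(psnr_values), max(psnr_values)
    if hi == lo:
        return [1.0] * len(psnr_values)
    return [(v - lo) / (hi - lo) for v in psnr_values]
```

Under this sketch, a frame that the predictor reconstructs poorly gets a low PSNR and therefore a score near 0, which matches the low response described above for anomalous frames.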

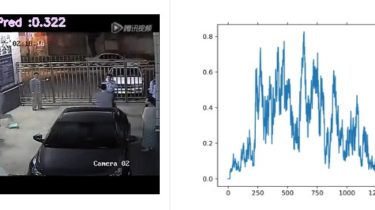

Real world Anomaly Detection in Surveillance Videos

This repository is a re-implementation of “Real-world Anomaly Detection in Surveillance Videos” in PyTorch. Our re-implementation achieves a much higher AUC than the original implementation.

Datasets: download the data from the following link and unzip it under your `$DATA_ROOT_DIR`: `/workspace/DATA/UCF-Crime/all_rgbs`

Directory tree: `DATA/ UCF-Crime/ ../all_rgbs ../~.npy ../all_flows ../~.npy train_anomaly.txt train_normal.txt test_anomaly.txt test_normal.txt`

Train-test script: `python main.py`

Result:

| Method | Dataset | AUC (%) |
| --- | --- | --- |
| Original paper (C3D two stream) | UCF-Crimes | 75.41 |
| RTFM (I3D RGB) | UCF-Crimes | 84.03 |
| Ours, re-implementation (I3D two stream) | UCF-Crimes | 84.45 |

Visualization Acknowledgment […]
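For readers unfamiliar with the metric in the table, the sketch below shows one way an AUC value could be computed, assuming it means the area under the ROC curve over binary normal/anomalous labels and predicted anomaly scores. The function name `roc_auc` and the sample values are hypothetical, and the pairwise formulation is chosen for clarity rather than speed; it is not the repository's evaluation code.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen anomalous sample
    (label 1) scores higher than a randomly chosen normal sample
    (label 0); ties count as one half.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up labels and scores, only to show the call.
example_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Multiplying the result by 100 gives a percentage on the same scale as the table.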

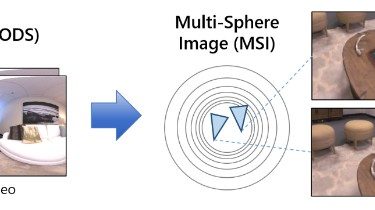

Real-time 6DoF Video View Synthesis using Multi-Sphere Images

MatryODShka: code for the following paper: MatryODShka: Real-time 6DoF Video View Synthesis using Multi-Sphere Images. Benjamin Attal, Selena Ling, Aaron Gokaslan, Christian Richardt, James Tompkin. ECCV 2020.

If you use this code, please cite: @inproceedings{Attal:2020:ECCV, author = "Benjamin Attal and Selena Ling and Aaron Gokaslan and Christian Richardt and James Tompkin", title = "{MatryODShka}: Real-time {6DoF} Video View Synthesis using Multi-Sphere Images", booktitle = "European Conference on Computer Vision (ECCV)", month = aug, year = "2020", url = "https://visual.cs.brown.edu/matryodshka" } Note that […]

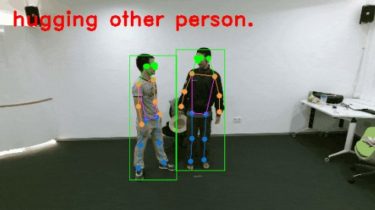

An open-source toolbox for video understanding based on PyTorch

MMAction2 is an open-source toolbox for video understanding based on PyTorch. It is a part of the OpenMMLab project. The master branch works with PyTorch 1.3+. [Figures: Action Recognition Results on Kinetics-400; Spatio-Temporal Action Detection Results on AVA-2.1; Skeleton-based Action Recognition Results on NTU-RGB+D-120]

Major Features. Modular design: we decompose the video understanding framework into different components, and one can easily construct a customized video understanding framework by combining different modules. Support for various datasets: the toolbox directly supports multiple datasets, […]

Temporally-Sensitive Pretraining of Video Encoders for Localization Tasks

TSP: Temporally-Sensitive Pretraining of Video Encoders for Localization Tasks. This repository holds the source code, pretrained models, and pre-extracted features for the TSP method. Please cite this work if you find TSP useful for your research: @article{alwassel2020tsp, title={TSP: Temporally-Sensitive Pretraining of Video Encoders for Localization Tasks}, author={Alwassel, Humam and Giancola, Silvio and Ghanem, Bernard}, journal={arXiv preprint arXiv:2011.11479}, year={2020} }

We provide pre-extracted features for ActivityNet v1.3 and THUMOS14 videos. The feature files are saved in H5 format, where we […]

Semi-supervised video object segmentation evaluation in python

[CVPR 2021] MiVOS – Mask Propagation module. Reproduced STM (and better) with training code. Semi-supervised video object segmentation evaluation. Ho Kei Cheng, Yu-Wing Tai, Chi-Keung Tang. New! See our new STCN for a better and faster algorithm. This repo implements an improved version of the Space-Time Memory Network (STM) and is part of the accompanying code for Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion (MiVOS). It can be used as: […]

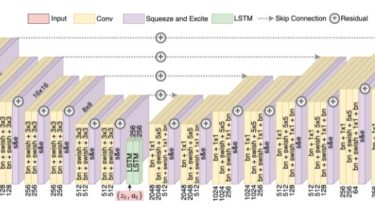

Implementation of FitVid video prediction model in JAX/Flax

FitVid Video Prediction Model: implementation of the FitVid video prediction model in JAX/Flax. If you find this code useful, please cite it in your paper: @article{babaeizadeh2021fitvid, title={FitVid: Overfitting in Pixel-Level Video Prediction}, author={Babaeizadeh, Mohammad and Saffar, Mohammad Taghi and Nair, Suraj and Levine, Sergey and Finn, Chelsea and Erhan, Dumitru}, journal={arXiv preprint arXiv:2106.13195}, year={2020} }

Method: FitVid is a new architecture for conditional variational video prediction. It has ~300 million parameters and can be trained with minimal training tricks. Sample […]
