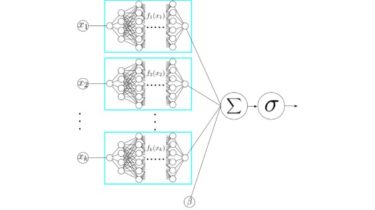

Unofficial PyTorch implementation of Neural Additive Models (NAM)

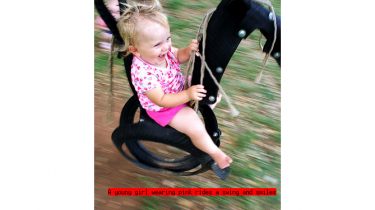

nam-pytorch

Unofficial PyTorch implementation of Neural Additive Models (NAM) by Agarwal et al. [abs, pdf]

Installation

You can install nam-pytorch via pip:

```
$ pip install nam-pytorch
```

Usage

```python
import torch
from nam_pytorch import NAM

nam = NAM(
    num_features=784,
    link_func="sigmoid"
)

images = torch.rand(32, 784)
pred = nam(images)  # [32, 1]
```

GitHub

https://github.com/rish-16/nam-pytorch
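To make the idea behind the `NAM(num_features=..., link_func="sigmoid")` call more concrete, here is a minimal sketch of an additive model in plain PyTorch. It is not the code inside nam-pytorch: the class name `TinyNAM`, the `hidden_dim` parameter, and the small ReLU networks are assumptions chosen for illustration. The sketch only shows the general structure: one small network per input feature, the per-feature outputs summed with a bias, and a sigmoid link applied to the sum.

```python
import torch
from torch import nn


class TinyNAM(nn.Module):
    """Illustrative additive model (hypothetical, not the nam-pytorch internals)."""

    def __init__(self, num_features: int, hidden_dim: int = 16):
        super().__init__()
        # One independent network per feature; each maps a scalar to a scalar.
        self.feature_nets = nn.ModuleList(
            [
                nn.Sequential(
                    nn.Linear(1, hidden_dim),
                    nn.ReLU(),
                    nn.Linear(hidden_dim, 1),
                )
                for _ in range(num_features)
            ]
        )
        # Shared bias added to the summed contributions.
        self.bias = nn.Parameter(torch.zeros(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x has shape [batch, num_features]; feed each column to its own network.
        contributions = torch.cat(
            [net(x[:, i : i + 1]) for i, net in enumerate(self.feature_nets)],
            dim=1,
        )  # [batch, num_features]
        # Sum the per-feature contributions, then apply the sigmoid link.
        logits = contributions.sum(dim=1, keepdim=True) + self.bias
        return torch.sigmoid(logits)  # [batch, 1]


model = TinyNAM(num_features=8)
x = torch.rand(32, 8)
pred = model(x)  # shape [32, 1]
```

Because each feature passes through its own network before the sum, the `contributions` tensor can be inspected column by column to see how much each feature adds to the prediction.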
