Category: Natural Language Processing

Detecting Gender Biases in NLP

Source: Times Higher Education

NLP-Entity Coreference Resolution

Explore multiple libraries

NLP-Custom Named Entity Recognition

spaCy and transformers: Complete Repo

Top Natural Language Processing Algorithms

https://Freepik.org

NLP-Knowledge Graph

Explore different libraries and create production-ready code

Building AI Products Using Large Language Models

How to effectively and efficiently build AI products using Large Language Models

A Glance of Natural Language Understanding System

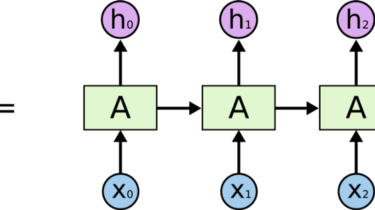

In recent years, applications of Natural Language Processing (NLP) have become widespread, including question answering, machine reading comprehension, summarization, dialogue, autocompletion, and machine translation. From 2013 to 2014, the use of neural network models in NLP gradually increased; the most widely used are convolutional neural networks (CNN), recurrent neural networks (RNN), and structural recurrent neural networks (SRN). Since text is ordered, the sequential structure of the RNN makes it the […]

BERT Explained

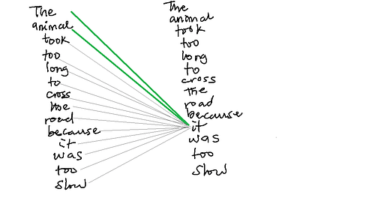

Continuous innovation in the contextual understanding of sentences has significantly pushed the boundaries of NLP. The Transformer architecture is built on self-attention, proposed in the 2017 paper "Attention Is All You Need". Self-attention learns to weigh the relationship between each item/word to […]
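The weighting idea can be illustrated with a minimal sketch of scaled dot-product self-attention in plain Python. This is a toy under stated assumptions, not the implementation used by BERT: the two-dimensional embeddings are made up for illustration, and the learned query, key, and value projections of a real Transformer are omitted, so the raw embeddings stand in for all three.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings for three tokens (assumed values).
tokens = ["the", "cat", "sat"]
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# No learned projections here: X is used as queries, keys, and values.
outputs, weights = self_attention(X, X, X)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, including itself; the rows sum to 1 because of the softmax.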

Abstractive text summarization with transformer-based models

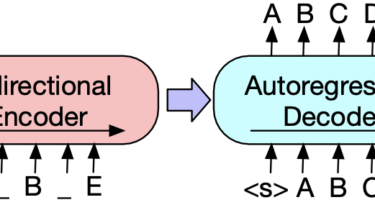

Text summarization is a text generation task that produces a concise and precise summary of an input text. There are two kinds of summarization in Natural Language Processing. The extractive approach identifies the most important sentences or phrases in the original text and combines them into a summary. The more advanced abstractive approach generates new phrases and sentences to represent the information in the input text.
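To make the extractive approach concrete, here is a naive sketch that scores each sentence by the average frequency of its words and keeps the top-scoring ones in their original order. The scoring rule, the function name `extractive_summary`, and the sample text are assumptions chosen for illustration; a practical system would at least filter stop words. The abstractive approach is not sketched because it requires a trained generation model.

```python
import re
from collections import Counter

def extractive_summary(text, num_sentences=1):
    """Pick the highest-scoring sentences, kept in original order."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [
        s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()
    ]
    # Word frequencies over the whole text (no stop-word filtering).
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        words = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(
        range(len(sentences)),
        key=lambda i: score(sentences[i]),
        reverse=True,
    )
    chosen = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in chosen)

sample = (
    "Attention is the core of transformers. "
    "Attention weighs attention scores. "
    "I like tea."
)
print(extractive_summary(sample, num_sentences=1))
```

The output consists only of sentences copied from the input, which is exactly what distinguishes extractive from abstractive summarization.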