A Gentle Introduction to Exponential Smoothing for Time Series Forecasting in Python

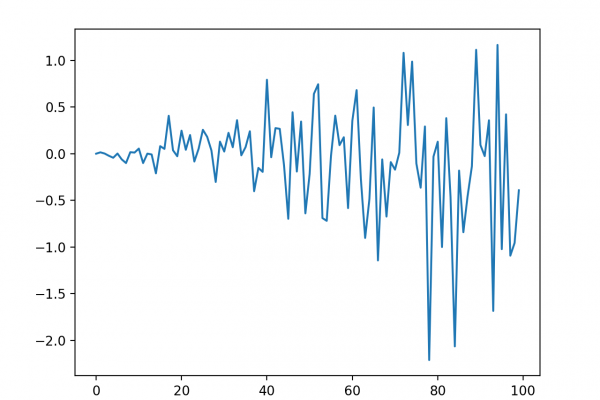

Last Updated on April 12, 2020

Exponential smoothing is a time series forecasting method for univariate data that can be extended to support data with a systematic trend or seasonal component. It is a powerful forecasting method that may be used as an alternative to the popular Box-Jenkins ARIMA family of methods.

In this tutorial, you will discover the exponential smoothing method for univariate time series forecasting.

After completing this tutorial, you will know: What exponential smoothing is and how […]
