ProtoAttend: Attention-Based Prototypical Learning

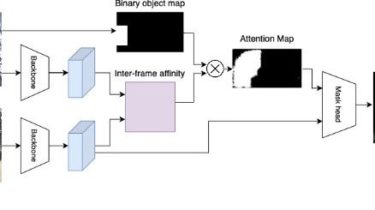

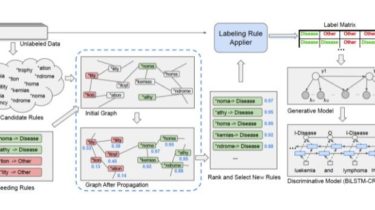

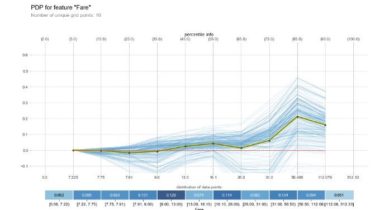

Authors: Sercan O. Arik and Tomas Pfister

Paper: Sercan O. Arik and Tomas Pfister, “ProtoAttend: Attention-Based Prototypical Learning”

Link: https://arxiv.org/abs/1902.06292

We propose a novel inherently interpretable machine learning method that bases decisions on few relevant examples that we call prototypes. Our method, ProtoAttend, can be integrated into a wide range of neural network architectures including pre-trained models. It utilizes an attention mechanism that relates the encoded representations to samples in order to determine prototypes. The resulting model outperforms state of the […]
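To make the idea of attending over samples concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the scaled dot-product scoring, the ordinary softmax, the function name `attend_to_candidates`, and the toy two-dimensional "encodings" are all assumptions chosen for illustration. It only shows the general pattern the abstract describes: a query's encoded representation is compared against encoded candidate samples, the resulting attention weights drive the decision, and the highest-weighted candidates are reported as prototypes.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_to_candidates(query, candidates, labels, num_classes, top_k=2):
    """Hypothetical helper: attention over candidate encodings.

    query       -- encoded representation of the input (list of floats)
    candidates  -- encoded representations of candidate samples
    labels      -- integer class label of each candidate
    Returns (class_probs, prototype_indices, weights).
    """
    # Assumption: scaled dot-product similarity between encodings.
    scale = math.sqrt(len(query))
    scores = [
        sum(q * c for q, c in zip(query, cand)) / scale
        for cand in candidates
    ]
    weights = softmax(scores)

    # The decision is a weighted vote of the candidates' labels.
    class_probs = [0.0] * num_classes
    for w, y in zip(weights, labels):
        class_probs[y] += w

    # The candidates with the largest weights act as prototypes.
    ranked = sorted(range(len(candidates)), key=lambda i: weights[i], reverse=True)
    return class_probs, ranked[:top_k], weights


# Toy data: four already-encoded candidates, two per class.
candidates = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
query = [0.8, 0.2]

class_probs, prototypes, weights = attend_to_candidates(
    query, candidates, labels, num_classes=2
)
predicted = max(range(2), key=lambda c: class_probs[c])
```

In this toy setup the query lies closest to the two class-0 candidates, so they receive the largest weights and are returned as the prototypes for the decision. A real model would learn the encoder and the attention jointly; the paper linked above is the reference for how ProtoAttend does this.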
