How to Calculate Parametric Statistical Hypothesis Tests in Python

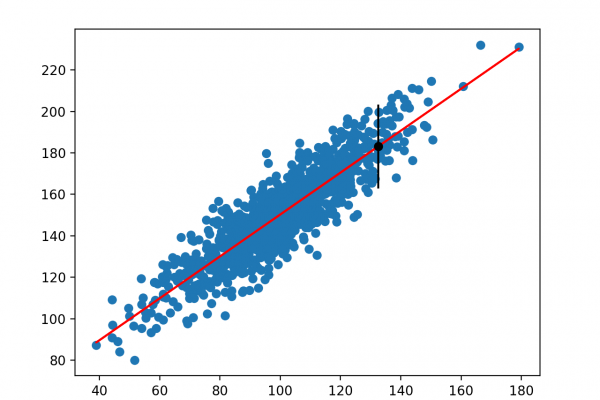

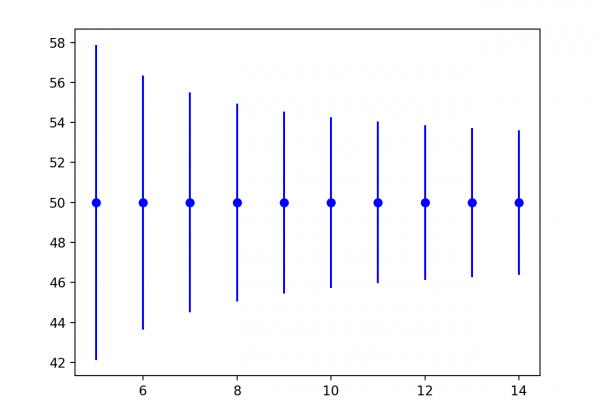

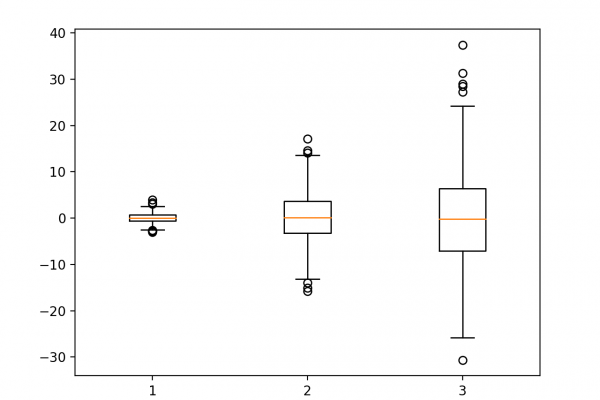

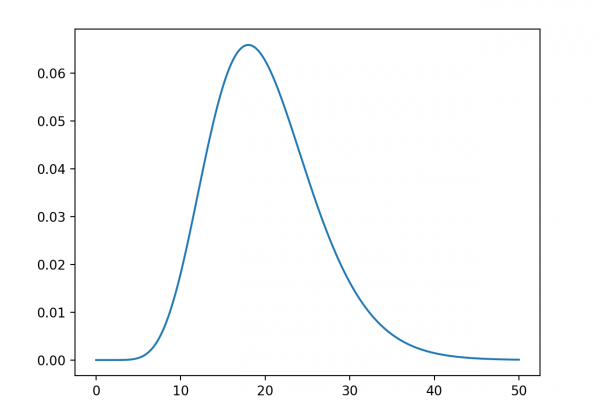

Last Updated on August 8, 2019

Parametric statistical methods are often those that assume the data samples have a Gaussian distribution. In applied machine learning, we need to compare data samples, specifically the means of the samples, perhaps to see whether one technique performs better than another on one or more datasets. To quantify this question and interpret the results, we can use parametric hypothesis testing methods such as the Student’s t-test and ANOVA. In this tutorial, you will […]
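As a rough illustration of the two tests named above, the sketch below compares sample means with SciPy's `ttest_ind` (Student's t-test for two independent samples) and `f_oneway` (one-way ANOVA). The helper names `compare_two_means` and `compare_many_means`, the 0.05 significance level, and the synthetic Gaussian scores are assumptions made for this example and are not taken from the tutorial itself.

```python
import numpy as np
from scipy.stats import f_oneway, ttest_ind

ALPHA = 0.05  # assumed significance level, chosen only for this sketch


def compare_two_means(sample_a, sample_b, alpha=ALPHA):
    """Student's t-test for two independent samples (hypothetical helper).

    Returns the test statistic, the p-value, and whether the null
    hypothesis of equal means is rejected at the given alpha.
    """
    stat, p_value = ttest_ind(sample_a, sample_b)
    return float(stat), float(p_value), bool(p_value < alpha)


def compare_many_means(*samples, alpha=ALPHA):
    """One-way ANOVA across two or more independent samples (hypothetical helper)."""
    stat, p_value = f_oneway(*samples)
    return float(stat), float(p_value), bool(p_value < alpha)


# Synthetic Gaussian "model scores", invented purely for illustration.
rng = np.random.default_rng(1)
scores_a = rng.normal(loc=50, scale=5, size=100)
scores_b = rng.normal(loc=51, scale=5, size=100)
scores_c = rng.normal(loc=52, scale=5, size=100)

t_stat, t_p, t_reject = compare_two_means(scores_a, scores_b)
print(f"t-test: stat={t_stat:.3f}, p={t_p:.3f}, reject H0={t_reject}")

f_stat, f_p, f_reject = compare_many_means(scores_a, scores_b, scores_c)
print(f"ANOVA:  stat={f_stat:.3f}, p={f_p:.3f}, reject H0={f_reject}")
```

Both tests assume the samples are independent and approximately Gaussian with similar variances. A p-value below the chosen alpha suggests that at least one mean differs, although ANOVA alone does not say which one.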
