Attention mechanism with MNIST dataset

MNIST_AttentionMap

[TensorFlow] Attention mechanism with MNIST dataset

Usage

$ python run.py

Result

Training

Loss graph.

Test

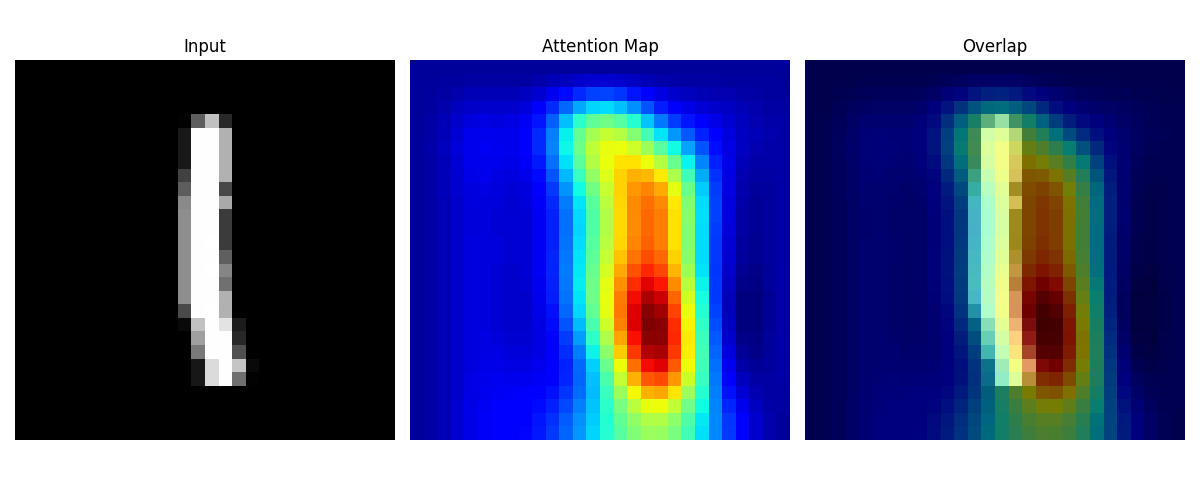

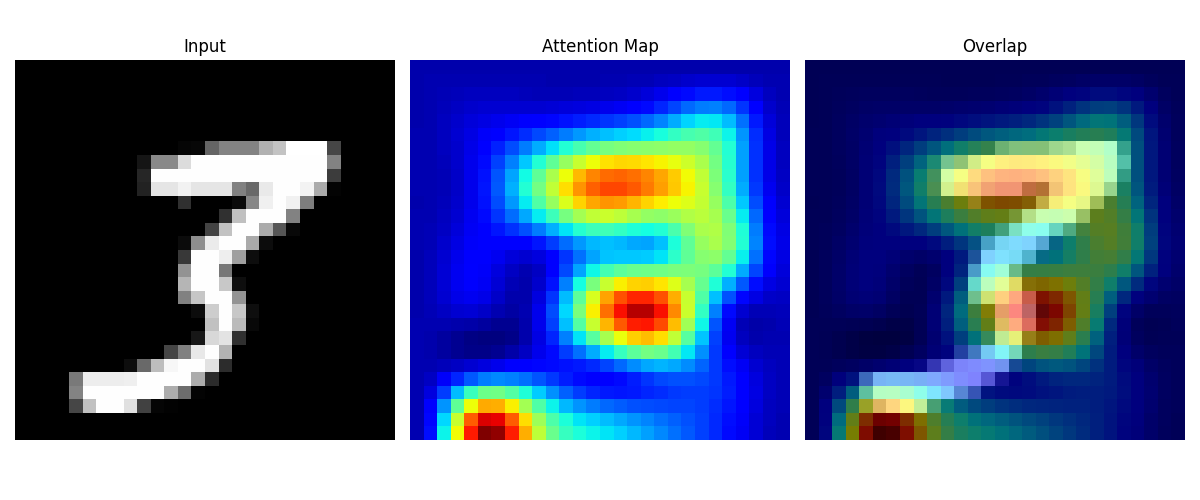

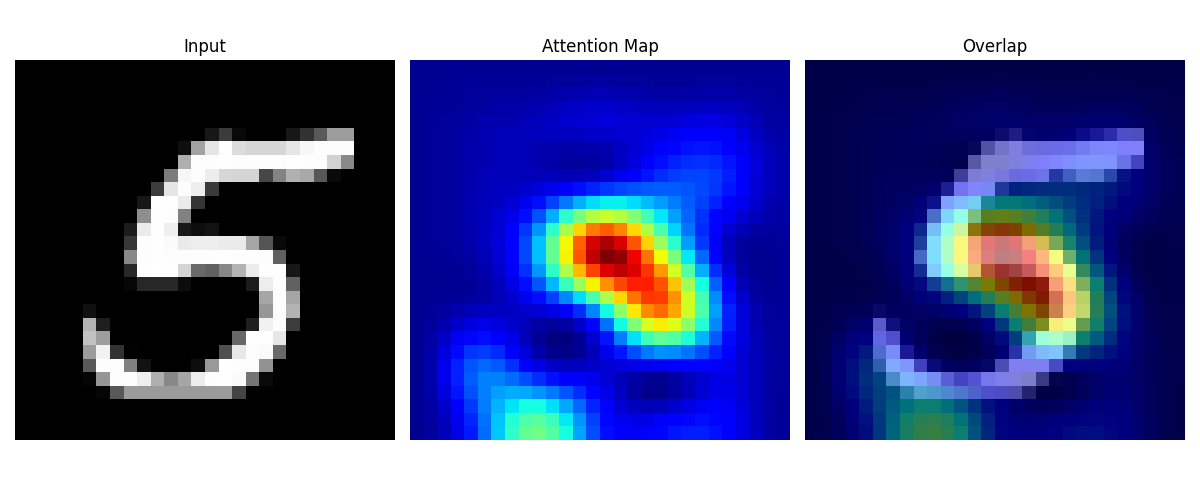

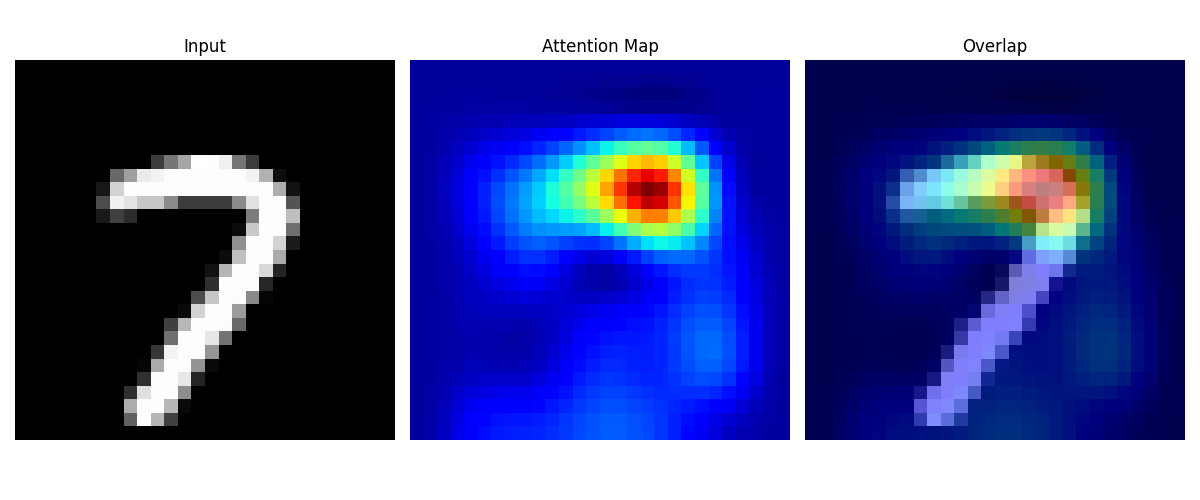

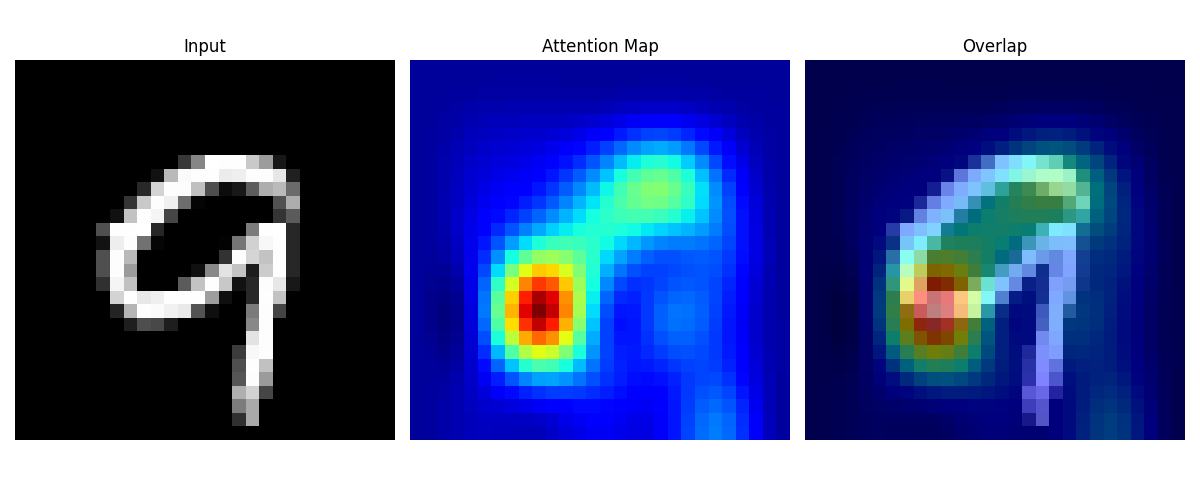

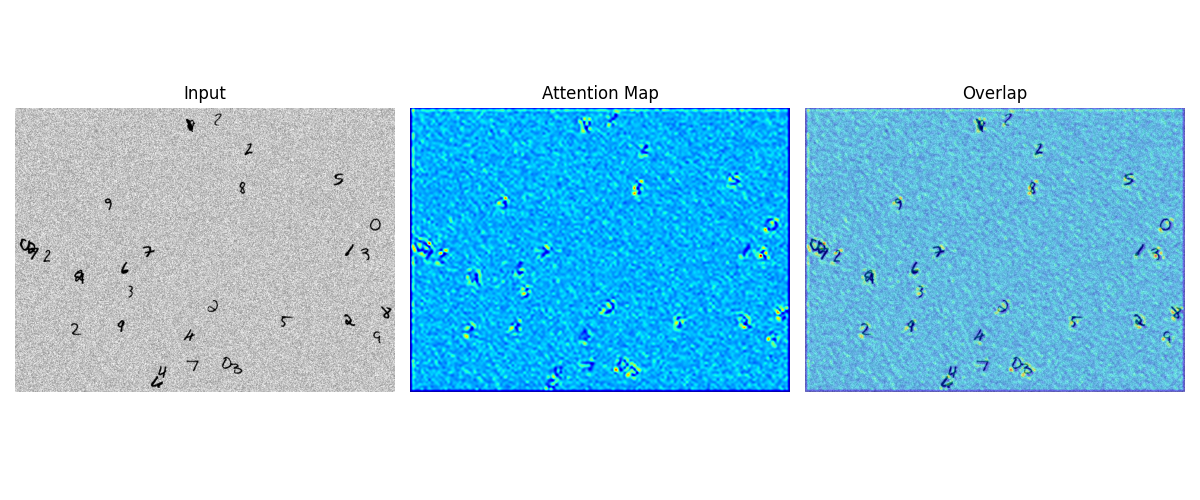

Each figure shows the input digit, the attention map, and the overlay of the two, in that order.
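The overlay panel can be approximated by blending the digit with its attention map. The snippet below is a minimal sketch, not the code in `run.py`: the function name `overlay_attention`, the `alpha` blending weight, and the synthetic arrays are assumptions made for illustration, and the attention map is assumed to have the same 28x28 shape as the input.

```python
import numpy as np


def overlay_attention(digit, attention, alpha=0.5):
    """Blend a grayscale digit with an attention map of the same shape.

    Hypothetical helper for illustration; both inputs are rescaled to [0, 1]
    before blending so that neither one dominates the result.
    """
    digit = np.asarray(digit, dtype=np.float32)
    attention = np.asarray(attention, dtype=np.float32)
    if digit.shape != attention.shape:
        raise ValueError("digit and attention must have the same shape")

    def rescale(x):
        # Min-max scaling; a constant array maps to all zeros.
        span = x.max() - x.min()
        return (x - x.min()) / span if span > 0 else np.zeros_like(x)

    return (1.0 - alpha) * rescale(digit) + alpha * rescale(attention)


# Synthetic stand-ins for an MNIST digit and a model-produced attention map.
digit = np.zeros((28, 28), dtype=np.float32)
digit[8:20, 12:16] = 1.0
attention = np.zeros((28, 28), dtype=np.float32)
attention[6:22, 10:18] = 0.8

overlay = overlay_attention(digit, attention)
```

With `alpha=0.5`, pixels covered by both the stroke and the attention region are brightest, while pixels covered by attention alone appear at half intensity.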

Further usage

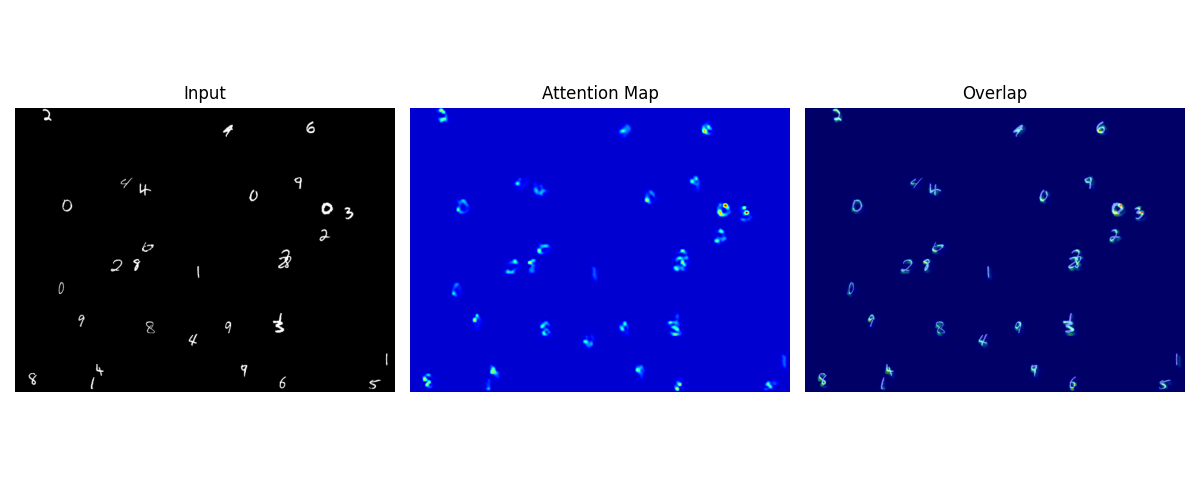

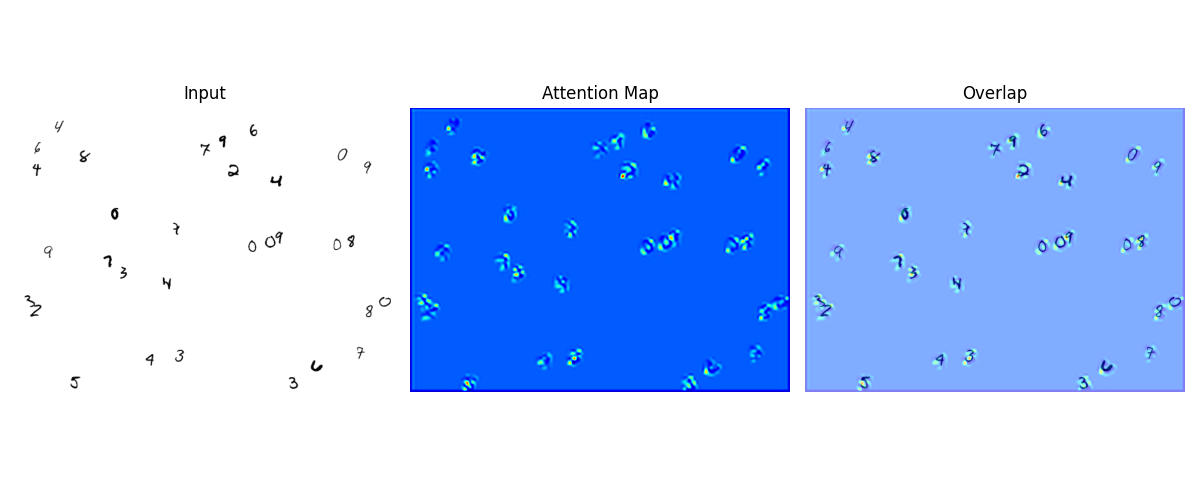

The attention map can also be used to detect the location of digits in an image.
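One simple way to turn an attention map into a location is to threshold it and take the bounding box of the remaining pixels. This is a sketch under stated assumptions rather than the method used in this repository: `attention_bounding_box` and its `threshold` parameter are hypothetical, and the attention map here is synthetic.

```python
import numpy as np


def attention_bounding_box(attention, threshold=0.5):
    """Return (top, left, bottom, right) of the strongly attended region.

    Hypothetical helper: keeps pixels whose value is at least `threshold`
    times the peak value. Coordinates are inclusive pixel indices.
    Returns None when the map has no positive response.
    """
    attention = np.asarray(attention, dtype=np.float32)
    peak = attention.max()
    if peak <= 0:
        return None

    mask = attention >= threshold * peak
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing attended pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing attended pixels
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])


# Synthetic attention map: one strong region plus a weak stray response.
attention = np.zeros((28, 28), dtype=np.float32)
attention[5:15, 8:20] = 0.9
attention[0, 0] = 0.1  # below the threshold, so it is ignored

box = attention_bounding_box(attention)
```

A relative threshold keeps the helper independent of the absolute scale of the attention values. For images containing several digits, a connected-component step would be needed to separate the regions.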

Requirements

- TensorFlow 2.3.0

- NumPy 1.18.5
