Dynamic Classifier Selection Ensembles in Python

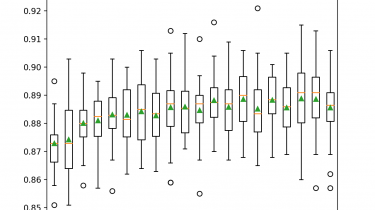

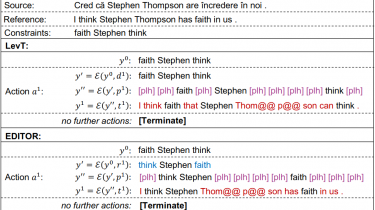

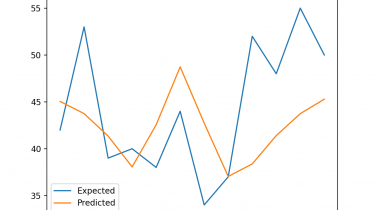

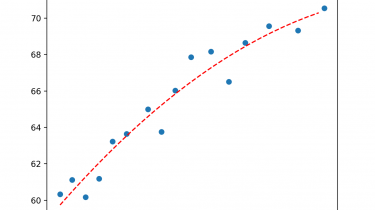

Dynamic classifier selection is a type of ensemble learning algorithm for classification predictive modeling. The technique fits multiple machine learning models on the training dataset and then, for each new example, selects the single model expected to perform best on that specific example. This can be achieved by using a k-nearest neighbors model to locate the examples in the training dataset that are closest to the new example to be predicted, evaluating all models […]
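To make the idea concrete, here is a minimal sketch of the procedure in plain Python, with no external libraries. Several parts are assumptions made for illustration and do not come from the text above: the `Stump` class is a hypothetical toy classifier standing in for any real model, the dataset is synthetic, the neighborhood size `k=7` is arbitrary, and the selection rule (pick the model with the highest accuracy on the neighbors, with ties going to the earlier model) is one common choice rather than the only one.

```python
import math
import random


class Stump:
    """Toy classifier that thresholds one feature (a stand-in for any real model)."""

    def __init__(self, feature):
        self.feature = feature

    def _rule(self, row, threshold, sign):
        above = row[self.feature] > threshold
        return int(above) if sign == 1 else int(not above)

    def fit(self, X, y):
        # Try every observed value as a threshold, in both directions,
        # and keep the rule with the most correct training predictions.
        best = None
        for threshold in sorted({row[self.feature] for row in X}):
            for sign in (1, -1):
                hits = sum(self._rule(r, threshold, sign) == t for r, t in zip(X, y))
                if best is None or hits > best[0]:
                    best = (hits, threshold, sign)
        _, self.threshold, self.sign = best
        return self

    def predict_one(self, row):
        return self._rule(row, self.threshold, self.sign)


def nearest_indices(X_train, query, k):
    """Indices of the k training rows closest to query (Euclidean distance)."""
    order = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], query))
    return order[:k]


def dcs_predict(models, X_train, y_train, query, k=7):
    """Predict with the pool member that is most accurate on the query's neighbors."""
    region = nearest_indices(X_train, query, k)

    def local_accuracy(model):
        hits = sum(model.predict_one(X_train[i]) == y_train[i] for i in region)
        return hits / len(region)

    best = max(models, key=local_accuracy)  # ties go to the earlier model
    return best.predict_one(query)


# Synthetic two-feature dataset (an assumption for this sketch).
rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(300)]
y = [int(a + b > 1.0) for a, b in X]
X_train, y_train = X[:200], y[:200]
X_test, y_test = X[200:], y[200:]

# Fit the pool once on the training data, then select a model per test row.
pool = [Stump(feature=0).fit(X_train, y_train), Stump(feature=1).fit(X_train, y_train)]
predictions = [dcs_predict(pool, X_train, y_train, row) for row in X_test]
accuracy = sum(p == t for p, t in zip(predictions, y_test)) / len(y_test)
print(f"DCS accuracy on held-out rows: {accuracy:.3f}")
```

Note that the pool is fitted only once. The per-example work is the neighbor search and the local scoring, so prediction cost grows with both the size of the training set and the number of models in the pool.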
