Hugging Face – 🤗Hugging Face Newsletter Issue #2 – Sep 11th 2020

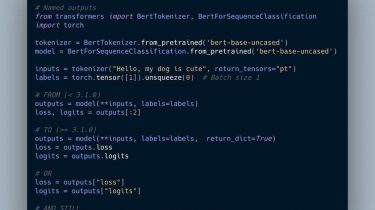

News

Transformers gets a new release: v3.1.0

This new version is the first PyPI release to feature:

- The PEGASUS models, the current state of the art in summarization
- DPR (Dense Passage Retrieval), for open-domain Q&A research
- mBART, a multilingual encoder-decoder model trained using the BART objective

Alongside the three new models, we are also releasing a long-awaited feature: “named outputs”. By passing `return_dict=True`, model outputs can now be accessed as named values as well as by…

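To make the idea behind “named outputs” concrete, here is a minimal stdlib-only sketch. It is not the Transformers implementation: the class `ToyModelOutput`, its `to_tuple` method and the field names `last_hidden_state` and `pooler_output` are illustrative assumptions. It also assumes, as the truncated sentence above suggests, that positional (tuple-style) access keeps working alongside access by name.

```python
from dataclasses import dataclass, fields
from typing import Any, Optional


@dataclass
class ToyModelOutput:
    """Hypothetical stand-in for a model output that can be read
    by attribute, by string key, or by position."""

    last_hidden_state: Any
    pooler_output: Optional[Any] = None

    def to_tuple(self) -> tuple:
        # Keep only the fields that were actually set, in declaration order.
        return tuple(
            getattr(self, f.name)
            for f in fields(self)
            if getattr(self, f.name) is not None
        )

    def __getitem__(self, key):
        # String keys act like a dict lookup restricted to declared fields;
        # ints and slices index into the tuple view.
        if isinstance(key, str):
            if key not in {f.name for f in fields(self)}:
                raise KeyError(key)
            return getattr(self, key)
        return self.to_tuple()[key]


out = ToyModelOutput(last_hidden_state=[[0.1, 0.2]], pooler_output=[0.3])
print(out.last_hidden_state)           # [[0.1, 0.2]]  (named attribute)
print(out["pooler_output"])            # [0.3]         (key-style access)
print(out[0] is out.last_hidden_state) # True          (positional access)
```

The design point is that one object serves both styles: new code can read fields by name, while code written against tuple outputs (e.g. `out[0]`) does not need to change.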