Implementation of Attention Mechanism for Caption Generation on Transformers using TensorFlow

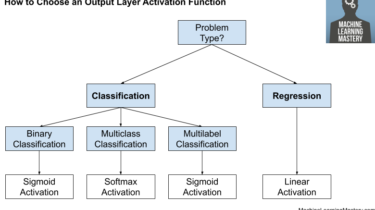

Overview

- Learn about the state-of-the-art Transformer model.
- Understand how to implement Transformers on the image captioning problem we have already seen, using TensorFlow.
- Compare the results of Transformers with those of the earlier attention models.

Introduction

We have seen in the previous article that attention mechanisms have become an integral part of compelling sequence modeling and transduction models in various tasks (such as image captioning). They allow dependencies to be modeled without regard to their distance in the input or output […]
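The claim that attention models dependencies regardless of distance can be made concrete with a minimal sketch of scaled dot-product attention, the core operation inside a Transformer. Every query position is scored against every key position in a single matrix product, so any two positions are one step apart no matter how far apart they sit in the sequence. This sketch uses NumPy rather than TensorFlow so it runs anywhere. The function name, the mask convention (1 marks a blocked position and adds a large negative number to its score, as in the TensorFlow Transformer tutorial), and the example shapes are assumptions for illustration, not the article's own code.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v, mask=None):
    """Return (softmax(q @ k^T / sqrt(d_k)) @ v, attention_weights).

    q: (..., seq_q, d_k), k: (..., seq_k, d_k), v: (..., seq_k, d_v).
    mask (assumed convention): array broadcastable to (..., seq_q, seq_k)
    where 1 marks a key position the query must NOT attend to.
    """
    d_k = q.shape[-1]
    # Score every query against every key in one product; distance plays no role.
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        # Push blocked positions towards -inf so softmax gives them ~0 weight.
        scores = scores + mask * -1e9
    # Numerically stable softmax over the key axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output is a weighted average of the value vectors.
    return weights @ v, weights


# Hypothetical shapes: 4 caption-token queries attending over 10 image-region features.
rng = np.random.default_rng(0)
q = rng.normal(size=(1, 4, 8))
k = rng.normal(size=(1, 10, 8))
v = rng.normal(size=(1, 10, 16))
out, w = scaled_dot_product_attention(q, k, v)
print(out.shape, w.shape)  # (1, 4, 16) (1, 4, 10)
```

In a captioning decoder the same function would typically also receive a look-ahead mask, so that each word attends only to the words generated before it.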
