Issue #113 – Optimising Transformer for Low-Resource Neural Machine Translation

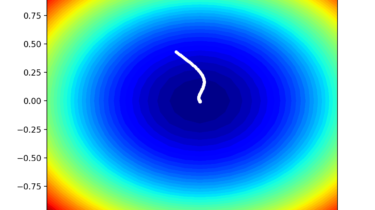

14 Jan 2021

Author: Dr. Jingyi Han, Machine Translation Scientist @ Iconic

Introduction

The lack of parallel training data has always been a major challenge when building neural machine translation (NMT) systems. Most approaches address the low-resource problem in NMT by exploiting more parallel or comparable corpora. Recently, several studies have shown that, instead of adding more data, optimising the NMT system itself can also improve translation quality for low-resource language […]
