A Gentle Introduction to the BFGS Optimization Algorithm

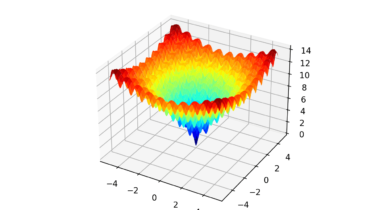

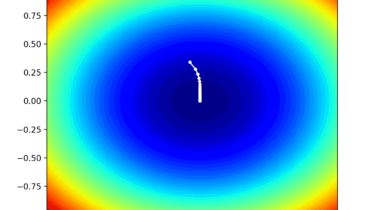

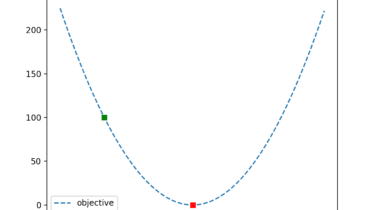

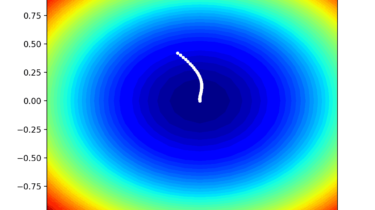

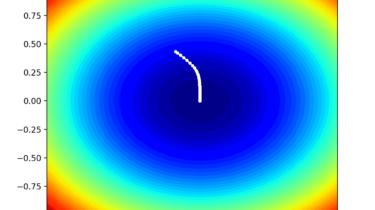

The Broyden, Fletcher, Goldfarb, and Shanno (BFGS) algorithm is a local search optimization algorithm. It is a second-order optimization algorithm, meaning that it makes use of the second-order derivative of an objective function. More precisely, it belongs to a class of algorithms called quasi-Newton methods, which approximate the second derivative (the Hessian) from first-order (gradient) information. This makes them useful for optimization problems where the second derivative cannot be calculated directly or is too expensive to compute. The BFGS algorithm is perhaps one of the most widely used second-order algorithms for […]
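To make the idea concrete, here is a minimal pure-Python sketch of BFGS. It assumes a smooth objective with a known analytic gradient. The objective, the gradient, the `bfgs` helper, and its parameters (`tol`, `max_iter`) are hypothetical illustrations and are not taken from the article. The line search is a simple Armijo backtracking rule, which is a simplification of the Wolfe line search that production implementations typically use. The code keeps an approximation `h` of the *inverse* Hessian, starting from the identity, and refines it after every step using only the change in position `s` and the change in gradient `y`.

```python
import math


def objective(x):
    # Hypothetical test function: a convex quadratic bowl with its minimum at (1, -2)
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def gradient(x):
    # Analytic gradient of the objective above
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def mat_vec(m, v):
    return [dot(row, v) for row in m]


def bfgs(f, grad, x0, tol=1e-8, max_iter=200):
    """Minimal BFGS sketch (hypothetical helper, Armijo backtracking line search)."""
    n = len(x0)
    x = list(x0)
    # Start with the identity matrix as the inverse-Hessian approximation
    h = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    g = grad(x)
    for _ in range(max_iter):
        # Stop once the gradient is (numerically) zero
        if math.sqrt(dot(g, g)) < tol:
            break
        # Quasi-Newton search direction: p = -H g
        p = [-v for v in mat_vec(h, g)]
        # Backtracking line search: shrink the step until the Armijo condition holds
        alpha, fx, slope = 1.0, f(x), dot(g, p)
        while (f([xi + alpha * pi for xi, pi in zip(x, p)]) > fx + 1e-4 * alpha * slope
               and alpha > 1e-12):
            alpha *= 0.5
        x_new = [xi + alpha * pi for xi, pi in zip(x, p)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]  # change in position
        y = [a - b for a, b in zip(g_new, g)]  # change in gradient
        sy = dot(s, y)
        # Only update when the curvature condition s^T y > 0 holds,
        # which keeps H positive definite
        if sy > 1e-12:
            rho = 1.0 / sy
            hy = mat_vec(h, y)
            yhy = dot(y, hy)
            # Expanded form (valid for symmetric H) of the BFGS inverse-Hessian update:
            # H <- (I - rho s y^T) H (I - rho y s^T) + rho s s^T
            h = [[h[i][j]
                  - rho * (s[i] * hy[j] + hy[i] * s[j])
                  + (rho * rho * yhy + rho) * s[i] * s[j]
                  for j in range(n)] for i in range(n)]
        x, g = x_new, g_new
    return x, f(x)


x_opt, f_opt = bfgs(objective, gradient, [0.0, 0.0])
print(x_opt, f_opt)  # x_opt should be close to [1.0, -2.0]
```

In practice you would more likely call a library routine such as SciPy's `scipy.optimize.minimize(fun, x0, method="BFGS")` than hand-roll the update. The sketch above only shows the core loop: a search direction from the approximate inverse Hessian, a line search, and a rank-two update built from gradient differences.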
