6 Dimensionality Reduction Algorithms With Python

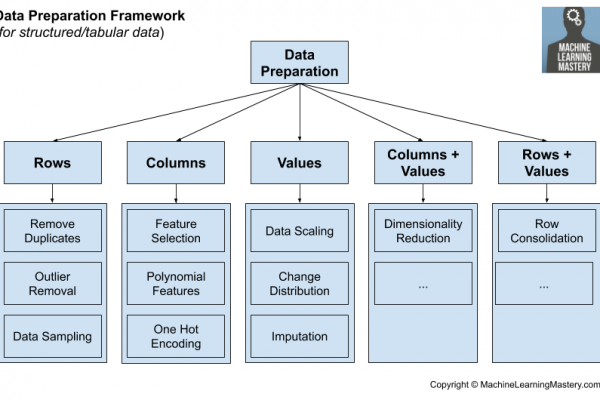

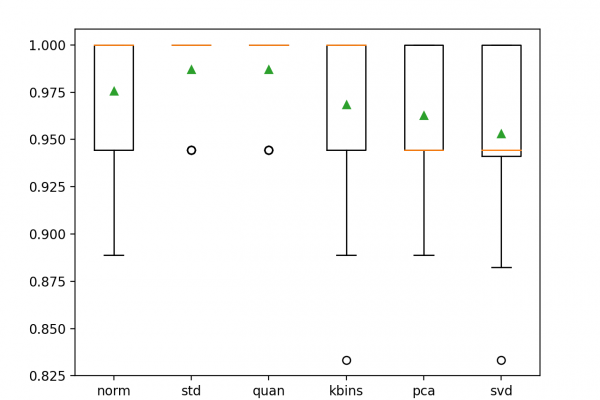

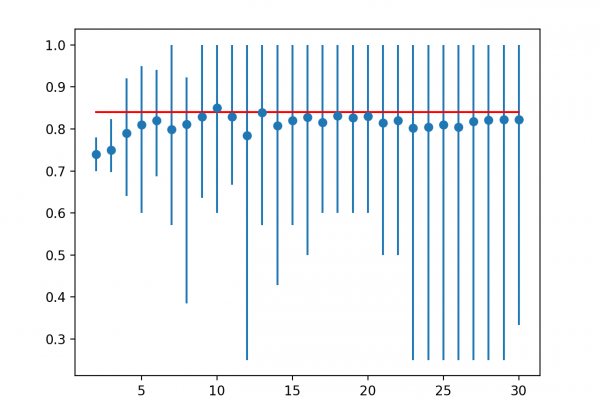

Last Updated on August 17, 2020

Dimensionality reduction is an unsupervised learning technique. Nevertheless, it can be used as a data transform pre-processing step for supervised learning algorithms on classification and regression predictive modeling datasets. There are many dimensionality reduction algorithms to choose from and no single best algorithm for all cases. Instead, it is a good idea to explore a range of dimensionality reduction algorithms and different configurations for each algorithm. In this tutorial, you […]
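As a rough illustration of using dimensionality reduction as a pre-processing step for a supervised model, here is a minimal sketch with scikit-learn. The synthetic dataset, the choice of PCA and truncated SVD, the logistic regression model, and the component counts are all assumptions made for this example; they are not taken from the tutorial itself.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA, TruncatedSVD
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline

# Synthetic classification data (an assumption for illustration):
# 20 input features, of which 10 are informative and 10 are redundant.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=10,
                           n_redundant=10, random_state=7)

# Two example reduction algorithms to compare (an arbitrary choice).
reducers = {"pca": PCA, "svd": TruncatedSVD}

def evaluate(reducer_cls, n_components):
    """Mean 5-fold accuracy of: reduce dimensions, then fit a classifier."""
    pipeline = Pipeline([
        ("reduce", reducer_cls(n_components=n_components)),
        ("model", LogisticRegression(max_iter=1000)),
    ])
    scores = cross_val_score(pipeline, X, y, scoring="accuracy", cv=5)
    return scores.mean()

# Explore each algorithm under a few different configurations.
results = {}
for name, reducer_cls in reducers.items():
    for n in (5, 10, 15):
        results[(name, n)] = evaluate(reducer_cls, n)
        print(f"{name} n_components={n}: mean accuracy {results[(name, n)]:.3f}")
```

Placing the transform inside a `Pipeline` means it is fit only on the training folds during cross-validation, which avoids leaking information from the held-out fold into the reduction step.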
