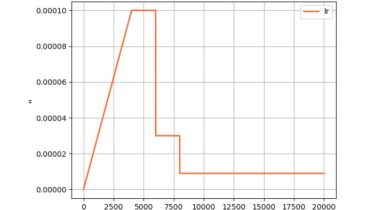

PyTorch implementations of several learning rate schedulers for deep learning researchers.

pytorch-lr-scheduler

Usage

WarmupReduceLROnPlateauScheduler

    import torch

    from lr_scheduler.warmup_reduce_lr_on_plateau_scheduler import WarmupReduceLROnPlateauScheduler

    if __name__ == '__main__':
        max_epochs, steps_in_epoch = 10, 10000
        # Named here so the warmup check in the training loop can refer to it.
        warmup_steps = 30000

        model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]
        optimizer = torch.optim.Adam(model, 1e-10)

        scheduler = WarmupReduceLROnPlateauScheduler(
            optimizer,
            init_lr=1e-10,
            peak_lr=1e-4,
            warmup_steps=warmup_steps,
            patience=1,
            factor=0.3,
        )

        for epoch in range(max_epochs):
            for timestep in range(steps_in_epoch):
                ...
                ...
                # Per-step call without a loss: advances the warmup phase.
                if timestep < warmup_steps:
                    scheduler.step()

            # validate() is the user's own validation routine; it is not defined here.
            val_loss = validate()
            # Per-epoch call with the validation loss: drives the reduce-on-plateau phase.
            scheduler.step(val_loss)

TransformerLRScheduler

    import torch

    from lr_scheduler.transformer_lr_scheduler import TransformerLRScheduler

    if __name__ == '__main__':
        max_epochs, steps_in_epoch [...]
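To make the two kinds of `scheduler.step()` calls in the WarmupReduceLROnPlateauScheduler usage easier to follow, here is a minimal pure-Python sketch of a warmup-then-plateau schedule. It is not the library's implementation. The class name `WarmupThenPlateau`, its method names, the linear warmup shape, and the "reduce once the number of non-improving epochs exceeds `patience`" rule are all assumptions made for illustration. Only the parameter names `init_lr`, `peak_lr`, `warmup_steps`, `patience`, and `factor` come from the usage example.

```python
class WarmupThenPlateau:
    """Hypothetical stand-in for illustration; not the library's class."""

    def __init__(self, init_lr, peak_lr, warmup_steps, patience, factor):
        self.init_lr = init_lr
        self.peak_lr = peak_lr
        self.warmup_steps = warmup_steps
        self.patience = patience
        self.factor = factor
        self.lr = init_lr
        self.step_count = 0
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def warmup_step(self):
        """Per-step update: move linearly from init_lr to peak_lr (assumed shape)."""
        if self.step_count < self.warmup_steps:
            self.step_count += 1
            frac = self.step_count / self.warmup_steps
            self.lr = self.init_lr + frac * (self.peak_lr - self.init_lr)
        return self.lr

    def plateau_step(self, val_loss):
        """Per-epoch update: scale lr by factor after too many non-improving epochs."""
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        # Assumed rule: reduce only once bad epochs exceed patience.
        if self.bad_epochs > self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr


if __name__ == "__main__":
    sched = WarmupThenPlateau(
        init_lr=1e-10, peak_lr=1e-4, warmup_steps=5, patience=1, factor=0.3
    )
    for _ in range(5):
        print("warmup lr:", sched.warmup_step())
    # Made-up validation losses, purely to exercise the plateau logic.
    for loss in [1.0, 1.0, 1.0]:
        print("plateau lr:", sched.plateau_step(loss))
```

Keeping the two phases as separate methods mirrors the calling pattern in the usage example: one call per training step during warmup, and one call per epoch with the validation loss afterwards.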
