RESTful API for encoding/decoding messages into/from images

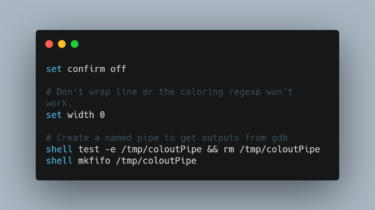

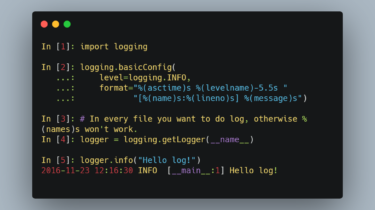

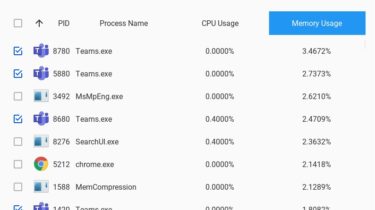

ImageSecrets is a RESTful API for encoding messages into images and decoding them back out.

Tech/framework used:

- Python 3.9
- FastAPI
- PostgreSQL with Tortoise ORM
- Docker
- Heroku

Features:

- Image steganography to encode and decode messages
- User management and authentication
- Interactive API documentation generated via OpenAPI

What I’ve learned:

- Python: improved my knowledge of testing, asynchronous development, ORMs, and code coverage
- FastAPI: gained extensive hands-on experience with the framework
- NumPy: used it to read and edit image pixel data
- Databases: learned how to use SQLAlchemy and Tortoise ORM for asynchronous database development

[…]
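The post does not say which steganography technique ImageSecrets uses. A common choice, assumed here and not confirmed by the source, is least-significant-bit (LSB) encoding: each bit of the message overwrites the lowest bit of one pixel byte. The sketch below assumes an 8-bit image already loaded as a NumPy `uint8` array (for example via Pillow). `encode_message`, `decode_message`, and the 32-bit length prefix are hypothetical illustrations, not the project's actual code.

```python
import numpy as np

LENGTH_BITS = 32  # hypothetical header: payload length as a 32-bit big-endian int


def encode_message(pixels: np.ndarray, message: str) -> np.ndarray:
    """Return a copy of `pixels` with `message` hidden in the lowest bits."""
    if pixels.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) image array")
    data = message.encode("utf-8")
    # Prefix the payload with its byte length so the decoder knows where to stop
    payload = len(data).to_bytes(LENGTH_BITS // 8, "big") + data
    bits = np.unpackbits(np.frombuffer(payload, dtype=np.uint8))
    flat = pixels.flatten()  # flatten() copies, so the caller's array is untouched
    if bits.size > flat.size:
        raise ValueError("message is too long for this image")
    # Clear the lowest bit of each target byte, then set it to the payload bit
    flat[: bits.size] = (flat[: bits.size] & 0xFE) | bits
    return flat.reshape(pixels.shape)


def decode_message(pixels: np.ndarray) -> str:
    """Recover a message hidden by `encode_message`."""
    lsbs = pixels.flatten() & 1  # lowest bit of every byte
    length = int.from_bytes(np.packbits(lsbs[:LENGTH_BITS]).tobytes(), "big")
    end = LENGTH_BITS + length * 8
    if end > lsbs.size:
        raise ValueError("no valid message found in this image")
    return np.packbits(lsbs[LENGTH_BITS:end]).tobytes().decode("utf-8")


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
    stego = encode_message(image, "Hello, ImageSecrets!")
    print(decode_message(stego))
```

Each byte changes by at most 1, so the difference is not visible to the eye. The encoded image has to be stored in a lossless format such as PNG, because lossy JPEG compression would scramble the low bits.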
