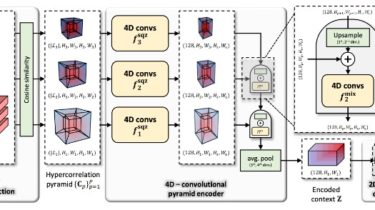

Hypercorrelation Squeeze for Few-Shot Segmentation

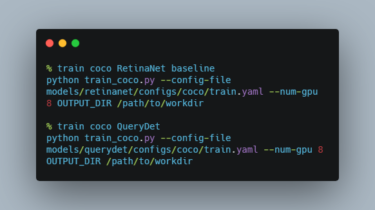

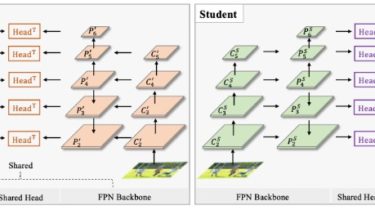

This is the implementation of the paper “Hypercorrelation Squeeze for Few-Shot Segmentation” by Juhong Min, Dahyun Kang, and Minsu Cho, implemented with Python 3.7 and PyTorch 1.5.1. For more information, check out the project [website] and the paper on [arXiv].

Requirements

- Python 3.7
- PyTorch 1.5.1
- CUDA 10.1
- tensorboard 1.14

Conda environment settings:

    conda create -n hsnet python=3.7
    conda activate hsnet
    conda install pytorch=1.5.1 torchvision cudatoolkit=10.1 -c pytorch
    conda install -c conda-forge tensorflow
    pip install tensorboardX

Preparing […]
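Once the conda environment is active, a quick sanity check can confirm that the interpreter and PyTorch match the pinned versions. The following is a minimal sketch and not part of the original repository: the helper names `matches`, `installed_versions`, and `report` are hypothetical, and only the Python and PyTorch pins from the requirements list are checked.

```python
import importlib
import sys

# Version pins copied from the requirements list above.
# The checking logic itself is an illustrative assumption, not the authors' tooling.
REQUIRED = {"python": "3.7", "torch": "1.5.1"}


def matches(installed, required):
    """Return True when `installed` equals `required` or extends it with more components."""
    installed = installed.split("+")[0]  # drop local build tags such as "+cu101"
    return installed == required or installed.startswith(required + ".")


def installed_versions():
    """Collect the running Python version and the PyTorch version (None if not importable)."""
    versions = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    try:
        versions["torch"] = importlib.import_module("torch").__version__
    except ImportError:
        versions["torch"] = None
    return versions


def report(versions, required=REQUIRED):
    """Build one human-readable status line per required package."""
    lines = []
    for name, want in required.items():
        have = versions.get(name)
        if have is None:
            status = "missing"
        elif matches(have, want):
            status = "ok"
        else:
            status = "mismatch"
        lines.append("{}: wanted {}, found {} ({})".format(name, want, have, status))
    return lines


if __name__ == "__main__":
    print("\n".join(report(installed_versions())))
```

Running the script inside the `hsnet` environment prints one line per pinned package; a `mismatch` or `missing` status suggests the conda commands above should be re-run.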
