Rotate Yolov5 with adjustments to enable rotated prediction boxes

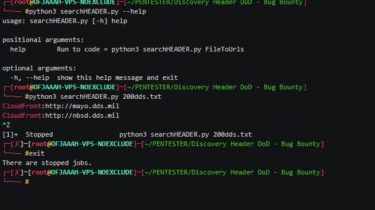

This repository is based on Ultralytics/yolov5, with several functions added and modified to support rotated prediction boxes. The changes relative to Ultralytics/yolov5 are summarized below:

1. `data/rotate_ucas.yaml`: an example UCAS-AOD dataset configuration for testing the effect of rotated boxes.
2. `data/images/UCAS-AOD`: images for running inference with `rotate-yolov5s-ucas.pt`.
3. `models/common.py`:
   1. `class Rotate_NMS`: Non-Maximum Suppression (NMS) module for rotated boxes.
   2. `class Rotate_AutoShape`: Rotate […]
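The summary lists `Rotate_NMS` as the NMS module for rotated boxes but does not show what rotated NMS involves. As an illustration of the general technique only, the sketch below does three things. It computes the exact IoU of two rotated rectangles by clipping one polygon against the other (Sutherland-Hodgman) and it measures areas with the shoelace formula. It then runs standard greedy NMS on that IoU. The sketch assumes a hypothetical `(cx, cy, w, h, theta)` box encoding with `theta` in radians. The names `rotated_iou` and `rotated_nms` are illustrative and are not the repository's `Rotate_NMS` interface, which may encode boxes or compute IoU differently.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
# ASSUMPTION: hypothetical box encoding (cx, cy, w, h, theta), theta in radians,
# counter-clockwise. The repository's Rotate_NMS may use a different encoding.
RBox = Tuple[float, float, float, float, float]


def box_to_polygon(box: RBox) -> List[Point]:
    """Return the four corners of a rotated box in counter-clockwise order."""
    cx, cy, w, h, theta = box
    c, s = math.cos(theta), math.sin(theta)
    offsets = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    # Rotate each corner offset by theta, then translate it to the box centre.
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def polygon_area(poly: Sequence[Point]) -> float:
    """Shoelace formula; returns 0.0 for empty or degenerate polygons."""
    n = len(poly)
    twice_area = sum(
        poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0


def _cross(a: Point, b: Point, p: Point) -> float:
    """z-component of (b - a) x (p - a); >= 0 means p is left of or on a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def clip_polygon(subject: Sequence[Point], clip: Sequence[Point]) -> List[Point]:
    """Sutherland-Hodgman: clip `subject` against the convex CCW polygon `clip`."""
    output = list(subject)
    for i in range(len(clip)):
        a, b = clip[i], clip[(i + 1) % len(clip)]
        inputs, output = output, []
        if not inputs:
            break  # intersection already empty
        prev = inputs[-1]
        for cur in inputs:
            d_prev, d_cur = _cross(a, b, prev), _cross(a, b, cur)
            if (d_prev >= 0) != (d_cur >= 0):
                # Edge prev->cur crosses the clip line: keep the crossing point.
                t = d_prev / (d_prev - d_cur)
                output.append((prev[0] + t * (cur[0] - prev[0]),
                               prev[1] + t * (cur[1] - prev[1])))
            if d_cur >= 0:
                output.append(cur)  # cur lies inside this half-plane
            prev = cur
    return output


def rotated_iou(box1: RBox, box2: RBox) -> float:
    """Exact IoU of two rotated boxes via convex polygon intersection."""
    p1, p2 = box_to_polygon(box1), box_to_polygon(box2)
    inter = polygon_area(clip_polygon(p1, p2))
    union = polygon_area(p1) + polygon_area(p2) - inter
    return inter / union if union > 0 else 0.0


def rotated_nms(boxes: Sequence[RBox], scores: Sequence[float],
                iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if its
    rotated IoU with every already-kept box is at most iou_threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(rotated_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [
        (50.0, 50.0, 40.0, 20.0, 0.0),
        (51.0, 50.0, 40.0, 20.0, math.radians(5)),    # near-duplicate of box 0
        (120.0, 80.0, 30.0, 30.0, math.radians(45)),  # far from the others
    ]
    scores = [0.9, 0.8, 0.7]
    print(rotated_nms(boxes, scores, iou_threshold=0.5))  # [0, 2]
```

Box 1 is a slightly shifted copy of box 0, rotated by 5°. Its rotated IoU with box 0 exceeds 0.5, so it is suppressed, and only boxes 0 and 2 survive. The pure-Python loop needs O(n²) IoU evaluations, which is acceptable for a sketch. A detector that filters thousands of candidates would typically batch this computation instead.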
