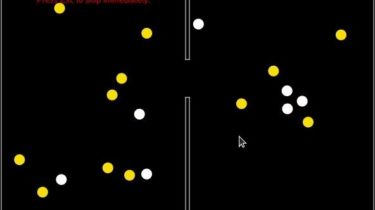

A simple keyboard game

Powered by Taichi. This is hw2 of the Taichi course, a basic class exercise.

Rigid 2D bodies and collision resolution

Rigid bodies are non-deformable, self-defined shapes, with no squashing or stretching allowed. We provide a class Rigid2dBodies for general rigid bodies, and 2 shapes, Circles and AABB (Axis-Aligned Bounding Boxes), as specific examples. We care about collisions between rigid bodies. Specifically, an impulse is calculated and applied. Sinking of objects is not dealt with due […]
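To make the impulse step concrete, here is a minimal sketch of impulse-based collision response between two circles. It is written in plain Python rather than Taichi, and it is not the project's actual code: the `Circle` dataclass, the `resolve_circle_collision` function, and the `restitution` parameter are hypothetical names chosen for illustration. Like the project, it only changes velocities and does not correct sinking (overlap).

```python
import math
from dataclasses import dataclass


@dataclass
class Circle:
    # Hypothetical stand-in for a circular rigid body (not the project's class).
    x: float
    y: float
    vx: float
    vy: float
    radius: float
    mass: float


def resolve_circle_collision(a: Circle, b: Circle, restitution: float = 1.0) -> bool:
    """Apply equal and opposite impulses to two overlapping circles.

    Returns True if an impulse was applied. Positions are left untouched,
    so any overlap (sinking) is not corrected here.
    """
    dx, dy = b.x - a.x, b.y - a.y
    dist = math.hypot(dx, dy)
    # No contact, or coincident centers (normal undefined): nothing to do.
    if dist == 0.0 or dist >= a.radius + b.radius:
        return False

    # Unit collision normal pointing from a to b.
    nx, ny = dx / dist, dy / dist

    # Relative velocity of b with respect to a, projected on the normal.
    rel_normal = (b.vx - a.vx) * nx + (b.vy - a.vy) * ny
    if rel_normal >= 0.0:
        return False  # bodies are already separating

    inv_a, inv_b = 1.0 / a.mass, 1.0 / b.mass
    # Scalar impulse magnitude along the normal.
    j = -(1.0 + restitution) * rel_normal / (inv_a + inv_b)

    a.vx -= j * inv_a * nx
    a.vy -= j * inv_a * ny
    b.vx += j * inv_b * nx
    b.vy += j * inv_b * ny
    return True
```

With `restitution=1.0` the collision is perfectly elastic, so two equal-mass circles meeting head-on simply exchange velocities; smaller values lose energy on impact.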
