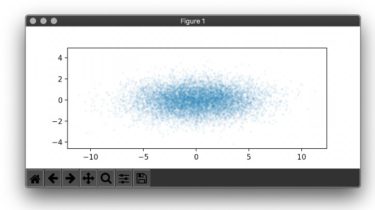

Forecasting for knowable future events using Bayesian informative priors

judgyprophet is a Bayesian forecasting algorithm based on Prophet that lets you forecast using information the business already has about future events. The aim is to enable forecasting with judgmental adjustment in a way that is as mathematically sound as possible. Some events have a big effect on your time series, and some of them you know about ahead of time, for example an existing product entering a new market or a price change to a product. These […]
