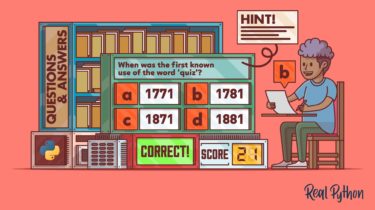

Build a Quiz Application With Python

In this tutorial, you’ll build a Python quiz application for the terminal. The word "quiz" was first used in 1781 to mean an eccentric person. Nowadays, it mostly describes short tests of trivia or expert knowledge, with questions like the following: "When was the first known use of the word quiz?" By following along in this step-by-step project, you’ll build an application that can test a person’s expertise on a range of topics. You can use this project to […]
