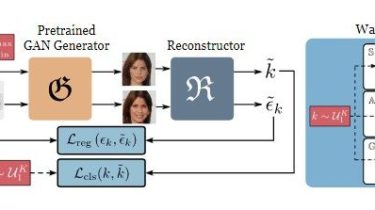

WarpedGANSpace: Finding non-linear RBF paths in GAN latent space

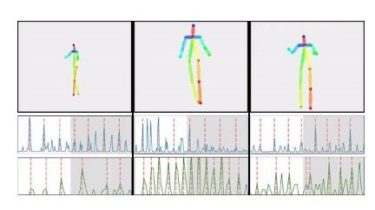

Authors official PyTorch implementation of the WarpedGANSpace: Finding non-linear RBF paths in GAN latent space (ICCV 2021). If you use this code for your research, please cite our paper. Overview In this work, we try to discover non-linear interpretable paths in GAN latent space. For doing so, we model non-linear paths using RBF-based warping functions, which by warping the latent space, endow it with vector fields (their gradients). We use the latter to traverse the latent space across the paths […]

Read more