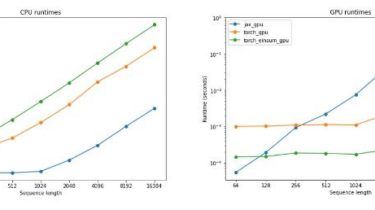

A small framework that mimics PyTorch using CuPy or NumPy

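CuPyTorch is a small PyTorch. The name comes from two things:

- Unlike the several existing open-source projects that implement PyTorch with NumPy, this project supports CUDA computation through CuPy.
- It is pronounced much like "Cool PyTorch", because implementing PyTorch in fewer than 1,000 lines of pure Python really is cool.

CuPyTorch supports two compute backends, NumPy and CuPy. It implements a large number of commonly used PyTorch features, aims for 99% compatibility with PyTorch's syntax and semantics, and is easy to extend. The features completed so far are listed below:

Code statistics from cloc:

| Language | files | blank | comment | code |
|----------|-------|-------|---------|------|
| Python   | 22    | 353   | 27      | 992  |

Autograd example: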
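The idea behind such an example can be illustrated with a minimal sketch of reverse-mode automatic differentiation over a switchable NumPy/CuPy backend. This is not CuPyTorch's actual code: the `Tensor` class, the `get_backend` helper, and the `device` argument below are hypothetical names chosen for illustration, and only same-shape element-wise `+`, `*`, and `sum` are covered (no broadcasting).

```python
import numpy as np


def get_backend(device="cpu"):
    """Return the array module for a device (hypothetical helper)."""
    if device == "cuda":
        import cupy  # optional dependency, imported only when requested
        return cupy
    return np


class Tensor:
    """Hypothetical minimal tensor; not CuPyTorch's actual class."""

    def __init__(self, data, requires_grad=False, device="cpu"):
        self.xp = get_backend(device)
        self.device = device
        self.data = self.xp.asarray(data, dtype=self.xp.float64)
        self.requires_grad = requires_grad
        self.grad = None
        self._parents = ()     # tensors this one was computed from
        self._backward = None  # maps upstream grad -> one grad per parent

    def _result(self, data, parents, backward):
        # An output needs gradients if any of its inputs does.
        out = Tensor(data, any(p.requires_grad for p in parents), self.device)
        out._parents, out._backward = parents, backward
        return out

    def __add__(self, other):
        return self._result(self.data + other.data, (self, other),
                            lambda g: (g, g))

    def __mul__(self, other):
        return self._result(self.data * other.data, (self, other),
                            lambda g: (g * other.data, g * self.data))

    def sum(self):
        return self._result(self.data.sum(), (self,),
                            lambda g: (g * self.xp.ones_like(self.data),))

    def backward(self):
        # Topologically sort the graph so each node is processed
        # only after every node that consumes it.
        order, seen = [], set()

        def visit(t):
            if id(t) in seen:
                return
            seen.add(id(t))
            for p in t._parents:
                visit(p)
            order.append(t)

        visit(self)
        self.grad = self.xp.ones_like(self.data)
        for t in reversed(order):
            if t._backward is None or t.grad is None:
                continue
            for parent, g in zip(t._parents, t._backward(t.grad)):
                if parent.requires_grad:
                    # Accumulate, since a tensor may be used more than once.
                    parent.grad = g if parent.grad is None else parent.grad + g


x = Tensor([1.0, 2.0, 3.0], requires_grad=True)
y = Tensor([4.0, 5.0, 6.0], requires_grad=True)
z = (x * y + x).sum()
z.backward()
print(x.grad)  # dz/dx = y + 1 -> [5. 6. 7.]
print(y.grad)  # dz/dy = x     -> [1. 2. 3.]
```

Because every operation goes through `self.xp`, passing `device="cuda"` would route the same code through CuPy, assuming CuPy and a CUDA-capable GPU are available. That single indirection is what lets one small code base serve both backends.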
