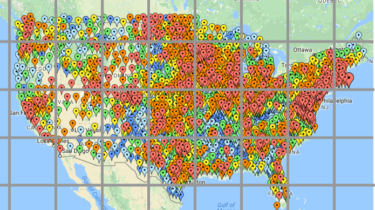

Clustering on maps with Elasticsearch

This article explains how to cluster points on a map using Elasticsearch and Python. What is clustering? Let's imagine that we have 10 million geo-points of businesses in the United States. Showing them all as separate pins on a map is impractical, and the result would be unreadable. Instead, we want to display groups, each labeled with the number of businesses in its region.
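
To make the idea concrete, here is a minimal pure-Python sketch of grid-based clustering: each point is assigned to a square cell, and every cell is reported once with a count. The function name `cluster_points`, the `cell_size_deg` parameter, and the sample coordinates are illustrative assumptions, not taken from the article. With Elasticsearch, this kind of bucketing would typically be done server-side (for example with the `geohash_grid` aggregation) rather than in application code.

```python
from collections import Counter


def cluster_points(points, cell_size_deg=1.0):
    """Group (lat, lon) points into square grid cells and count each cell.

    Hypothetical helper for illustration only; cell_size_deg is the
    side length of a cell in degrees.
    """
    counts = Counter()
    for lat, lon in points:
        # Floor division maps each coordinate to the index of its grid cell.
        cell = (int(lat // cell_size_deg), int(lon // cell_size_deg))
        counts[cell] += 1
    # Report each cluster at the centre of its cell, with its point count.
    return [
        {
            "lat": (row + 0.5) * cell_size_deg,
            "lon": (col + 0.5) * cell_size_deg,
            "count": n,
        }
        for (row, col), n in counts.items()
    ]


# Made-up sample data: two points that fall in one cell, one elsewhere.
businesses = [(40.71, -74.00), (40.73, -73.99), (34.05, -118.24)]
clusters = cluster_points(businesses)
```

A larger `cell_size_deg` merges more points into each group, which is the behaviour a map usually wants at low zoom levels; a smaller value splits groups apart as the user zooms in.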
