Neural Machine Translation — Using seq2seq with Keras

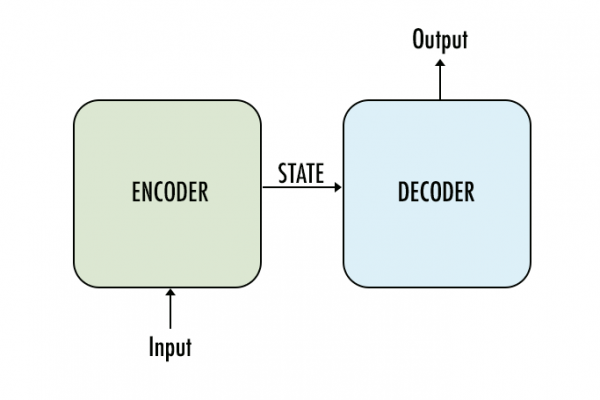

First we will train the network. Then we will look at the inference models, which translate a given English sentence into French. The inference model (used for predicting on the input sequence) has a slightly different decoder architecture, which we will discuss in detail when we get there.
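To make the training/inference split a little more concrete before we get to the models, here is a minimal, framework-free sketch of the decoding loop that a seq2seq inference setup typically runs. It rests on an assumption about the usual design, not on anything shown so far: during training the decoder sees the whole target sentence, while at inference it has to produce one token at a time and feed its own prediction and state back in. The names `greedy_decode`, `toy_encode`, `toy_decode_step` and the `<start>`/`<end>` markers are hypothetical stand-ins for the Keras encoder and decoder models, not part of any library.

```python
from typing import Callable, List, Sequence, Tuple

# Stand-in for the recurrent state (in a real model: the LSTM hidden and cell states).
State = Tuple[str, ...]


def greedy_decode(
    encode: Callable[[Sequence[str]], State],
    decode_step: Callable[[str, State], Tuple[str, State]],
    source: Sequence[str],
    start_token: str = "<start>",
    end_token: str = "<end>",
    max_len: int = 20,
) -> List[str]:
    """Translate `source` one token at a time, feeding each prediction back in."""
    state = encode(source)      # run the encoder once to get the initial decoder state
    token = start_token         # the decoder always starts from the start marker
    output: List[str] = []
    for _ in range(max_len):    # cap the length in case the end marker never appears
        token, state = decode_step(token, state)
        if token == end_token:
            break
        output.append(token)
    return output


# Toy stand-ins (hypothetical): a lookup table instead of a trained network.
TOY_TABLE = {"hello": "bonjour", "world": "monde"}


def toy_encode(source: Sequence[str]) -> State:
    # The "state" is simply the words still waiting to be translated.
    return tuple(source)


def toy_decode_step(prev_token: str, state: State) -> Tuple[str, State]:
    # Emit the end marker once nothing is left, otherwise translate the next word.
    if not state:
        return "<end>", state
    return TOY_TABLE.get(state[0], "<unk>"), state[1:]


translation = greedy_decode(toy_encode, toy_decode_step, ["hello", "world"])
print(translation)  # ['bonjour', 'monde']
```

In a Keras version, `encode` would roughly correspond to calling `predict` on an encoder model and `decode_step` to calling `predict` on a separate decoder model that takes the previous token and states as inputs, which is why the inference decoder needs a different shape from the training one.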
