Bi-encoder-based entity linker for Japanese, written in Python

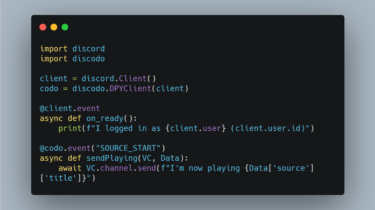

jel: Japanese Entity Linker

jel (Japanese Entity Linker) is a bi-encoder-based entity linker for Japanese. Currently, the link and question methods are supported.

el.link

This returns each named entity found in the input text, together with its candidate entities drawn from Wikipedia titles.

    from jel import EntityLinker
    el = EntityLinker()
    el.link('今日は東京都のマックにアップルを買いに行き、スティーブジョブスとドナルドに会い、堀田区に引っ越した。')
    >> [
        {
            "text": "東京都",
            "label": "GPE",
            "span": [3, 6],
            "predicted_normalized_entities": [
                ["東京都庁", 0.1084],
                ["東京", 0.0633],
                ["国家地方警察東京都本部", 0.0604],
                ["東京都", 0.0598],
                …
            ]
        },
        {
            "text": "アップル",
            […]
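As a rough illustration of how the el.link result might be consumed, the sketch below picks the highest-scoring candidate for each mention. It does not call jel itself: `sample` is a hand-copied fragment of the output shown in the excerpt, and `top_candidates` and its `min_score` parameter are hypothetical helpers introduced here, not part of the library. It also assumes that `span` holds half-open character offsets into the input string, which matches the one example shown but is not confirmed for every case.

```python
def top_candidates(mentions, min_score=0.0):
    """Map each mention text to its best (title, score) candidate.

    Hypothetical helper, not part of jel. Assumes each mention dict has
    the keys shown in the excerpt: "text" and
    "predicted_normalized_entities" (a list of [title, score] pairs).
    """
    best = {}
    for mention in mentions:
        candidates = mention.get("predicted_normalized_entities", [])
        if not candidates:
            continue  # nothing to choose from for this mention
        title, score = max(candidates, key=lambda pair: pair[1])
        if score >= min_score:
            best[mention["text"]] = (title, score)
    return best


# Input sentence and first result entry, copied from the excerpt.
sentence = "今日は東京都のマックにアップルを買いに行き、スティーブジョブスとドナルドに会い、堀田区に引っ越した。"
sample = [
    {
        "text": "東京都",
        "label": "GPE",
        "span": [3, 6],
        "predicted_normalized_entities": [
            ["東京都庁", 0.1084],
            ["東京", 0.0633],
            ["国家地方警察東京都本部", 0.0604],
            ["東京都", 0.0598],
        ],
    }
]

start, end = sample[0]["span"]
print(sentence[start:end])     # mention text recovered from the span
print(top_candidates(sample))  # best candidate per mention
```

In this fragment the top-ranked candidate (東京都庁) is not the exact surface form (東京都), and all scores are low, so a caller may want to inspect several candidates or apply a threshold through `min_score` rather than trusting the first one.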
