Real-world Anomaly Detection in Surveillance Videos

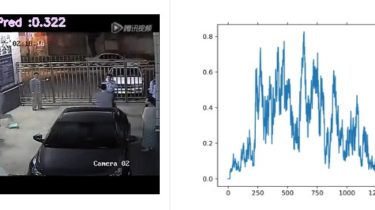

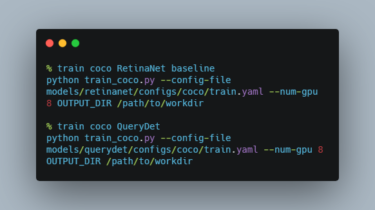

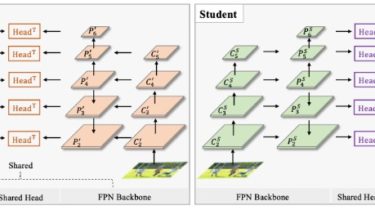

This repository is a PyTorch re-implementation of “Real-world Anomaly Detection in Surveillance Videos”. Our re-implementation reaches a higher AUC than the original paper (84.45 vs. 75.41 on UCF-Crime; see Results).

Datasets

Download the data from the following link and unzip it under your `$DATA_ROOT_DIR`:

`/workspace/DATA/UCF-Crime/all_rgbs`

Directory tree

- `DATA/`
  - `UCF-Crime/`
    - `all_rgbs/`
      - `~.npy`
    - `all_flows/`
      - `~.npy`
  - `train_anomaly.txt`
  - `train_normal.txt`
  - `test_anomaly.txt`
  - `test_normal.txt`

Train and test

Run `python main.py`.

Results

| Method | Dataset | AUC (%) |
|---|---|---|
| Original paper (C3D two stream) | UCF-Crime | 75.41 |
| RTFM (I3D RGB) | UCF-Crime | 84.03 |
| Ours, re-implementation (I3D two stream) | UCF-Crime | 84.45 |

Visualization

Acknowledgment […]
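
The Datasets section lists a directory layout with separate RGB (`all_rgbs`) and optical-flow (`all_flows`) feature folders plus four split files. The sketch below shows one way to pair the two streams for each listed video before loading them. It is not code from this repository: the helper `load_split` and the `FeaturePair` record are hypothetical, and it assumes that each non-empty line of a split file names one video whose features are stored as `all_rgbs/<name>.npy` and `all_flows/<name>.npy`. `main.py` may read the data differently.

```python
"""Hypothetical helper that pairs RGB and flow feature files for each video.

Assumptions (not confirmed by this repository): each non-empty line of a split
file such as train_anomaly.txt names one video, and that video's features are
stored as all_rgbs/<name>.npy and all_flows/<name>.npy inside the UCF-Crime folder.
"""
import os
from typing import List, NamedTuple


class FeaturePair(NamedTuple):
    name: str
    rgb_path: str
    flow_path: str
    label: int  # hypothetical convention: 1 = anomalous, 0 = normal


def load_split(ucf_dir: str, split_path: str, label: int) -> List[FeaturePair]:
    """Read one split file and resolve both feature paths for every video in it."""
    pairs: List[FeaturePair] = []
    with open(split_path) as f:
        for line in f:
            name = line.strip()
            if not name:
                continue  # skip blank lines
            # Accept entries written with or without the .npy extension.
            stem = name[:-4] if name.endswith(".npy") else name
            rgb = os.path.join(ucf_dir, "all_rgbs", stem + ".npy")
            flow = os.path.join(ucf_dir, "all_flows", stem + ".npy")
            # A two-stream model needs both files, so fail early if either is absent.
            missing = [p for p in (rgb, flow) if not os.path.exists(p)]
            if missing:
                raise FileNotFoundError(f"missing features for {stem}: {missing}")
            pairs.append(FeaturePair(stem, rgb, flow, label))
    return pairs
```

For example, `load_split("/workspace/DATA/UCF-Crime", "/workspace/DATA/train_anomaly.txt", label=1)` would return one record per anomalous training video. This assumes the split files sit directly under `DATA/` as in the tree above. The arrays themselves could then be read with `numpy.load` and fused, for instance by concatenation, which is again an assumption about how the two streams are combined.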
