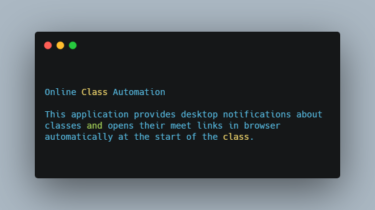

A simple scheduler tool that provides desktop notifications about classes

Online Class Automation

This application provides desktop notifications about classes and automatically opens their meet links in the browser when each class starts. It works on both Windows and Linux, but runs better on Linux when used with cron.

Code Overview

`class-data.json`: stores the timetable in a simple JSON format. Specify the name and meet link of each class, along with its timing, according to your timetable. The time of a class is specified by day and hour. Day ranges from 0 […]
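The scheduling idea above (look up the class whose day and hour match the current time, then open its meet link) can be sketched as follows. This is not the project's code. The JSON field names (`name`, `link`, `day`, `hour`), the sample links, and the helper names `load_timetable` and `class_at` are hypothetical. Because the description of the day range is truncated, the sketch also assumes Python's `datetime.weekday()` convention (Monday = 0), which may not match the project. A `print` stands in for the desktop notification.

```python
import json
import webbrowser
from datetime import datetime
from typing import Optional

# Hypothetical timetable layout; the real class-data.json schema may differ.
SAMPLE_TIMETABLE = """
[
  {"name": "Mathematics", "link": "https://meet.example.com/math", "day": 0, "hour": 9},
  {"name": "Physics", "link": "https://meet.example.com/phys", "day": 0, "hour": 11},
  {"name": "Chemistry", "link": "https://meet.example.com/chem", "day": 2, "hour": 10}
]
"""


def load_timetable(text: str) -> list:
    """Parse the JSON timetable into a list of class dicts."""
    return json.loads(text)


def class_at(timetable: list, day: int, hour: int) -> Optional[dict]:
    """Return the first class scheduled for the given day and hour, or None."""
    for entry in timetable:
        if entry["day"] == day and entry["hour"] == hour:
            return entry
    return None


def main() -> None:
    timetable = load_timetable(SAMPLE_TIMETABLE)
    now = datetime.now()
    # Assumption: day 0 is Monday, as returned by datetime.weekday().
    current = class_at(timetable, now.weekday(), now.hour)
    if current is None:
        print("No class scheduled right now.")
        return
    # Stand-in for a desktop notification: print a message instead.
    print(f"Starting {current['name']}, opening {current['link']}")
    webbrowser.open(current["link"])


if __name__ == "__main__":
    main()
```

On Linux, a script like this could be run at the top of every hour by a crontab entry such as `0 * * * * python3 /path/to/scheduler.py` (hypothetical path), which matches the hour-level granularity of the timetable.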
