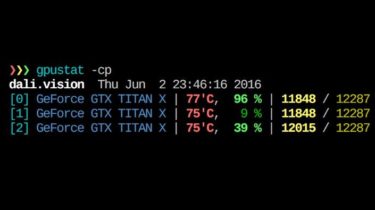

A simple command-line utility for querying and monitoring GPU status

Just less than nvidia-smi?

NOTE: This works with NVIDIA Graphics Devices only, no AMD support as of now. Contributions are welcome!

Self-Promotion: A web interface of gpustat is available (in alpha)! Check out gpustat-web.

Usage

`$ gpustat`

Options:

- `--color` : Force colored output (even when stdout is not a tty)
- `--no-color` : Suppress colored output
- `-u`, `--show-user` : Display username of the process owner
- `-c`, `--show-cmd` : Display the process name
- `-f`, `--show-full-cmd` : Display full command and cpu stats

[…]
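To call gpustat from a script with the options listed above, something like the following minimal sketch may help. The helper names `build_gpustat_command` and `run_gpustat` are hypothetical and not part of gpustat; the sketch only assembles a command line from the documented flags and runs it if a `gpustat` executable is found on `PATH`.

```python
import shutil
import subprocess


def build_gpustat_command(show_user=False, show_cmd=False, color=None):
    """Build an argv list for gpustat using only the flags listed above.

    This is an illustrative helper, not part of gpustat itself.
    color=True adds --color, color=False adds --no-color, None adds neither.
    """
    argv = ["gpustat"]
    if show_user:
        argv.append("--show-user")
    if show_cmd:
        argv.append("--show-cmd")
    if color is True:
        argv.append("--color")
    elif color is False:
        argv.append("--no-color")
    return argv


def run_gpustat(**options):
    """Run gpustat and return its stdout, or None if it is not installed."""
    argv = build_gpustat_command(**options)
    if shutil.which(argv[0]) is None:
        return None
    result = subprocess.run(argv, capture_output=True, text=True, check=False)
    return result.stdout


if __name__ == "__main__":
    output = run_gpustat(show_user=True, show_cmd=True, color=False)
    print(output if output is not None else "gpustat not found on PATH")
```

Passing `color=False` is a reasonable default when the output is captured rather than shown in a terminal, since it keeps color escape codes out of the captured text.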
