My notes on data structures and algorithms, with implementations in Go and Python

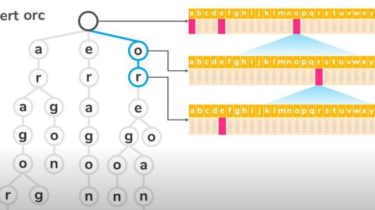

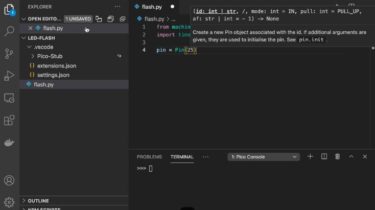

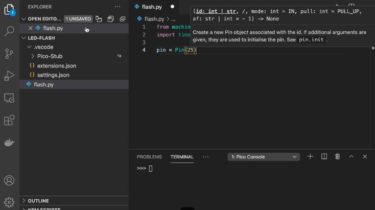

Arrays

Advantages of arrays:

- Reading and writing is O(1).

Disadvantages of arrays:

- Insertion and deletion is O(n).
- Arrays are not dynamic. If you need to store an extra element, you have to create a new array and copy all the elements over, which is O(n).

List slicing in Python: `[start:stop:step]`

Reverse a list: `list[::-1]`

Linked lists

Linked lists are great for problems that require arbitrary insertion. Dynamic arrays allow inserting […]
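The slicing notation and the O(n) cost of growing a fixed-size array noted above can be sketched in Python as follows. This is a minimal illustration, not code from the original notes: the sample list `nums` and the helper `insert_fixed` are hypothetical names, and a Python list stands in for a fixed-size array.

```python
# Slicing uses [start:stop:step]; every part is optional.
nums = [10, 20, 30, 40, 50]  # hypothetical sample data

first_three = nums[0:3]      # elements at indexes 0, 1, 2
every_other = nums[::2]      # step of 2 over the whole list
reversed_copy = nums[::-1]   # negative step walks backwards; nums is unchanged


def insert_fixed(arr, index, value):
    """Insert into a fixed-size array by allocating a new one.

    Hypothetical helper: every existing element is copied once,
    which is why the operation is O(n).
    """
    new_arr = [None] * (len(arr) + 1)
    for i in range(index):            # copy elements before the insert point
        new_arr[i] = arr[i]
    new_arr[index] = value
    for i in range(index, len(arr)):  # shift the rest one slot to the right
        new_arr[i + 1] = arr[i]
    return new_arr


grown = insert_fixed(nums, 2, 25)
```

Reading `nums[3]` touches a single slot, while `insert_fixed` touches every element, which is the O(1) versus O(n) contrast from the notes.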
