SMOTE for Imbalanced Classification with Python

Last Updated on August 21, 2020

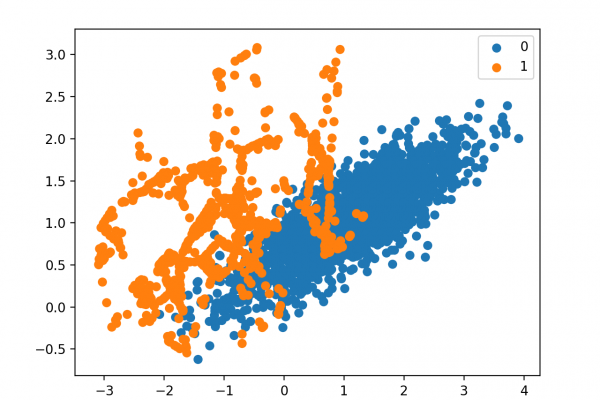

Imbalanced classification involves developing predictive models on classification datasets that have a severe class imbalance.

The challenge of working with imbalanced datasets is that most machine learning techniques will ignore the minority class and, in turn, perform poorly on it, although it is typically performance on the minority class that matters most.

One approach to addressing imbalanced datasets is to oversample the minority class. The simplest approach involves duplicating examples in the minority class, although these examples don’t add any new information to the model. Instead, new examples can be synthesized from the existing examples. This is a type of data augmentation for the minority class and is referred to as the Synthetic Minority Oversampling Technique, or SMOTE for short.
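The core SMOTE step can be made concrete with a minimal NumPy sketch, assuming continuous features and Euclidean distance. The function `smote` and its parameters are hypothetical names chosen for illustration, not the API of any library. For each new point, it picks a random minority example, chooses one of that example's `k` nearest minority-class neighbours, and places the synthetic example at a random position on the line segment between the two.

```python
import numpy as np

def smote(X_min, n_synthetic, k=5, rng=None):
    """Hypothetical sketch: create n_synthetic points by interpolating
    between minority examples and their k nearest minority neighbours."""
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority examples")
    k = min(k, n - 1)
    # Pairwise Euclidean distances within the minority class only.
    diff = X_min[:, None, :] - X_min[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)  # an example is not its own neighbour
    # Indices of the k nearest minority neighbours of each example.
    neighbours = np.argsort(dist, axis=1)[:, :k]
    synthetic = np.empty((n_synthetic, X_min.shape[1]))
    for i in range(n_synthetic):
        base = rng.integers(n)                   # random minority example
        nb = neighbours[base, rng.integers(k)]   # one of its k neighbours
        gap = rng.random()                       # position on the segment, in [0, 1)
        synthetic[i] = X_min[base] + gap * (X_min[nb] - X_min[base])
    return synthetic

# Four minority examples at the corners of the unit square.
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new = smote(X_min, n_synthetic=6, k=2, rng=1)
print(new.shape)  # (6, 2)
```

Unlike duplicated rows, each synthetic example is generally a new feature vector, but it always lies on a segment between two existing minority examples, so SMOTE stays within the region the minority class already occupies.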

In this tutorial, you will discover SMOTE for oversampling imbalanced classification datasets.

After completing this tutorial, you will know:

- How SMOTE synthesizes new examples for the minority class.

- How to correctly fit and evaluate machine learning models on SMOTE-transformed training datasets.

- How to use extensions of SMOTE that generate synthetic examples along the class decision boundary.
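One boundary-focused extension, Borderline-SMOTE, can be sketched in NumPy as follows, again assuming continuous features and Euclidean distance. The function `borderline_smote` and its parameters `m` and `k` are hypothetical names for this sketch, not a library API. A minority example is treated as "in danger" when at least half, but not all, of its `m` nearest neighbours in the full dataset belong to the majority class; examples whose neighbours are all majority are treated as noise and skipped. Only danger examples seed new points, which are interpolated toward their `k` nearest minority neighbours as in plain SMOTE. As a simplification, seeds are drawn uniformly at random rather than producing a fixed number of points per seed.

```python
import numpy as np

def borderline_smote(X, y, minority_label, n_synthetic, m=5, k=5, rng=None):
    """Hypothetical sketch of Borderline-SMOTE: oversample only minority
    examples that sit near the class boundary ("danger" examples)."""
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    is_min = y == minority_label
    X_min = X[is_min]
    # Distances from each minority example to every example in the dataset.
    d_all = np.linalg.norm(X_min[:, None, :] - X[None, :, :], axis=-1)
    d_all[np.arange(len(X_min)), np.flatnonzero(is_min)] = np.inf  # exclude self
    nn_all = np.argsort(d_all, axis=1)[:, :m]
    n_majority = (~is_min[nn_all]).sum(axis=1)
    # Danger: at least half, but not all, of the m neighbours are majority.
    danger = (n_majority >= m / 2) & (n_majority < m)
    if not danger.any():
        return np.empty((0, X.shape[1]))
    # k nearest minority neighbours, used for interpolation as in plain SMOTE.
    d_min = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(d_min, np.inf)
    k = min(k, len(X_min) - 1)
    nn_min = np.argsort(d_min, axis=1)[:, :k]
    seeds = np.flatnonzero(danger)
    out = np.empty((n_synthetic, X.shape[1]))
    for i in range(n_synthetic):
        base = rng.choice(seeds)                 # a random danger example
        nb = nn_min[base, rng.integers(k)]       # one of its minority neighbours
        out[i] = X_min[base] + rng.random() * (X_min[nb] - X_min[base])
    return out

# 1-D toy data: minority examples at 5.5 and 6.0 border the majority class,
# while those at 10.0 to 11.5 are surrounded by other minority examples.
X = np.array([[0.], [1.], [2.], [3.], [4.], [5.],
              [5.5], [6.0], [10.], [10.5], [11.], [11.5]])
y = np.array([0] * 6 + [1] * 6)
new = borderline_smote(X, y, minority_label=1, n_synthetic=5, m=3, k=1, rng=0)
print(new.ravel())  # every value lies between 5.5 and 6.0
```

When evaluating a model, oversampling of either kind should be applied only to the training portion of each split, never to the test data, so that the evaluation reflects performance on real, unseen examples.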
