Word Level English to Marathi Neural Machine Translation using Encoder-Decoder Model

The LSTM reads the data one element of the sequence at a time (in a word-level model, one word per step). Thus if the input is a sequence of length ‘k’, we say that the LSTM reads it in ‘k’ time steps (think of this as a for loop with ‘k’ iterations).
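To make the "for loop with ‘k’ iterations" picture concrete, here is a minimal pure-Python sketch of an LSTM reading a sequence one step at a time. It uses the standard LSTM gate equations (input, forget, candidate and output gates). It is an illustration only, not the Keras layer this article builds on. `TinyLSTMCell` and `run_lstm` are hypothetical names. The weights are random and untrained, and the input vectors stand in for word embeddings (assumed values, not real embeddings).

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Multiply matrix W (a list of rows) by vector v.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class TinyLSTMCell:
    """Hypothetical minimal LSTM cell for illustration (random, untrained weights)."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # One weight matrix per gate: input (i), forget (f), candidate (g), output (o).
        # Each gate acts on the concatenation [x_t, h_{t-1}].
        self.W = {gate: init(hidden_dim, input_dim + hidden_dim) for gate in "ifgo"}
        self.b = {gate: [0.0] * hidden_dim for gate in "ifgo"}

    def step(self, x_t, h_prev, c_prev):
        # One time step: combine the current input with the previous hidden state.
        z = list(x_t) + list(h_prev)
        pre = {
            gate: [a + b for a, b in zip(matvec(self.W[gate], z), self.b[gate])]
            for gate in "ifgo"
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        g = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        # New cell state: keep part of the old state, add part of the candidate.
        c_t = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
        # New hidden state: the output gate filters tanh of the cell state.
        h_t = [oj * math.tanh(cj) for oj, cj in zip(o, c_t)]
        return h_t, c_t


def run_lstm(cell, sequence):
    """Read a length-k sequence in k time steps, one loop iteration per element."""
    h = [0.0] * cell.hidden_dim
    c = [0.0] * cell.hidden_dim
    hidden_states = []
    for x_t in sequence:  # k iterations for a sequence of length k
        h, c = cell.step(x_t, h, c)
        hidden_states.append(h)
    return hidden_states, (h, c)


if __name__ == "__main__":
    # Four made-up 3-dimensional "word embeddings" for a 4-word sentence (k = 4).
    sentence = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4], [0.5, 0.1, -0.2], [0.2, 0.2, 0.2]]
    cell = TinyLSTMCell(input_dim=3, hidden_dim=5, seed=42)
    states, (h_final, c_final) = run_lstm(cell, sentence)
    print(len(states), "time steps")
```

The final pair `(h_final, c_final)` is the kind of state an encoder hands to the decoder in an encoder-decoder model; the per-step `states` list corresponds to returning the full sequence of hidden states.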

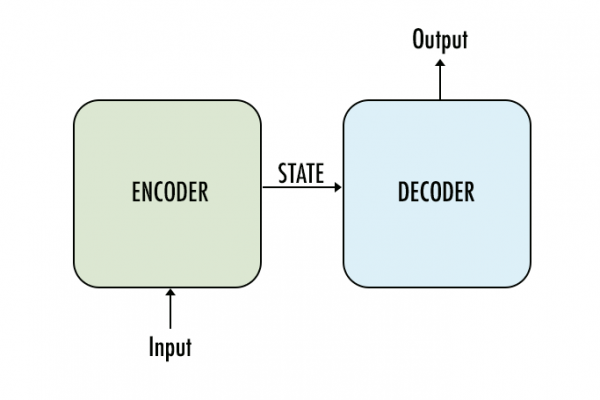

Referring to the