A novel evolutionary computation framework for rapid prototyping and testing of ideas

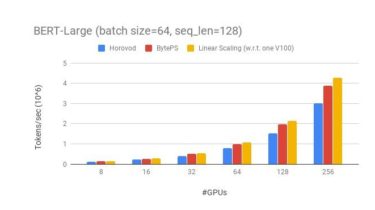

DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data structures transparent. It works in perfect harmony with parallelisation mechanisms such as multiprocessing and SCOOP.

DEAP includes the following features:

- Genetic algorithm using any imaginable representation
  - List, Array, Set, Dictionary, Tree, Numpy Array, etc.
- Genetic programming using prefix trees
  - Loosely typed, Strongly typed
  - Automatically defined functions
- Evolution strategies (including CMA-ES)
- Multi-objective optimisation (NSGA-II, NSGA-III, SPEA2, MO-CMA-ES)
- Co-evolution (cooperative […]
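
To make the idea of an "explicit algorithm" over a "transparent data structure" concrete, here is a minimal sketch of a genetic algorithm on a plain list representation. It is written in standard-library Python only and deliberately does not use DEAP's API; the function names (`one_max`, `tournament`, `two_point_crossover`, `flip_bit_mutation`, `evolve`), the OneMax toy problem, and all parameter values are assumptions chosen for illustration.

```python
import random


def one_max(individual):
    """Toy fitness (assumed for illustration): count the 1 bits."""
    return sum(individual)


def tournament(population, k, rng):
    """Pick the fittest of k individuals sampled without replacement."""
    return max(rng.sample(population, k), key=one_max)


def two_point_crossover(a, b, rng):
    """Swap the segment between two random cut points (needs len(a) >= 3)."""
    i, j = sorted(rng.sample(range(1, len(a)), 2))
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]


def flip_bit_mutation(individual, indpb, rng):
    """Flip each bit independently with probability indpb."""
    return [1 - g if rng.random() < indpb else g for g in individual]


def evolve(n_bits=20, pop_size=30, generations=40, cxpb=0.5, mutpb=0.2, seed=0):
    """Run a simple generational GA and return the best individual seen."""
    rng = random.Random(seed)
    # Individuals are ordinary Python lists of 0/1 genes.
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=one_max)

    for _ in range(generations):
        # Selection: build the next generation from tournament winners.
        offspring = [list(tournament(population, 3, rng))
                     for _ in range(pop_size)]

        # Variation: mate neighbouring pairs, then mutate some individuals.
        for i in range(0, pop_size - 1, 2):
            if rng.random() < cxpb:
                offspring[i], offspring[i + 1] = two_point_crossover(
                    offspring[i], offspring[i + 1], rng)
        offspring = [flip_bit_mutation(ind, 0.05, rng)
                     if rng.random() < mutpb else ind
                     for ind in offspring]

        population = offspring
        best = max(population + [best], key=one_max)

    return best


if __name__ == "__main__":
    winner = evolve()
    print(winner, one_max(winner))
```

Every step of the loop (selection, crossover, mutation, replacement) is visible and can be swapped out independently, which is the style of prototyping the description above refers to. A framework such as DEAP supplies ready-made operators and algorithms so that these building blocks need not be rewritten by hand.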
