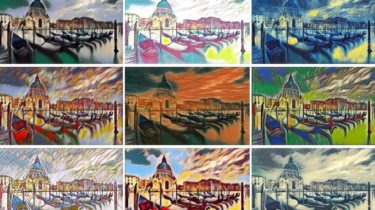

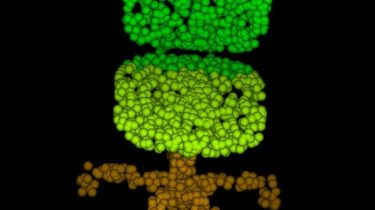

Neural Style and MSG-Net in PyTorch

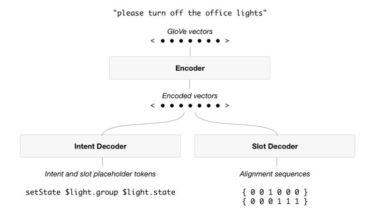

This repo provides a PyTorch implementation of MSG-Net (ours) and Neural Style (Gatys et al. CVPR 2016), which has been included in ModelDepot. We also provide Torch and MXNet implementations.

MSG-Net

Multi-style Generative Network for Real-time Transfer [arXiv] [project]

Hang Zhang, Kristin Dana

@article{zhang2017multistyle,
  title={Multi-style Generative Network for Real-time Transfer},
  author={Zhang, Hang and Dana, Kristin},
  journal={arXiv preprint arXiv:1703.06953},
  year={2017}
}

Stylize Images Using Pre-trained MSG-Net

Download the pre-trained model
