Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy

This package provides spaCy components and architectures for using transformer models in spaCy via Hugging Face’s `transformers` library. The result is convenient access to state-of-the-art transformer architectures such as BERT, GPT-2 and XLNet.

This release requires spaCy v3. For the previous version of this library, see the `v0.6.x` branch.

Features

- Use pretrained transformer models like BERT, RoBERTa and XLNet to power your spaCy pipeline.

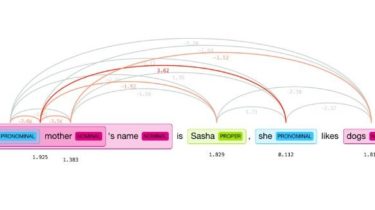

- Easy multi-task learning: backprop to one transformer model from several pipeline components.

- Train using spaCy v3’s powerful and extensible