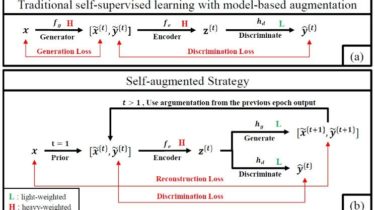

SAS: Self-Augmentation Strategy for Language Model Pre-training

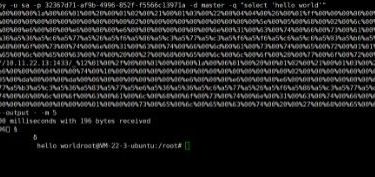

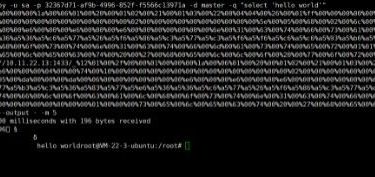

This repository contains the official PyTorch implementation of the paper “SAS: Self-Augmentation Strategy for Language Model Pre-training”, based on Huggingface transformers version 4.3.0. Only SAS without the disentangled attention mechanism is released for now. To be updated.

File structure:

- `train.py`: The file for pre-training.
- `run_glue.py`: The file for fine-tuning.
- `models`
  - `modeling_sas.py`: The main algorithm for SAS.
  - `trainer_sas.py`: Inherited from Huggingface transformers and modified mainly for data processing.
- `utils`: Includes all the utilities.
  - `data_collator_sas.py`: It […]
