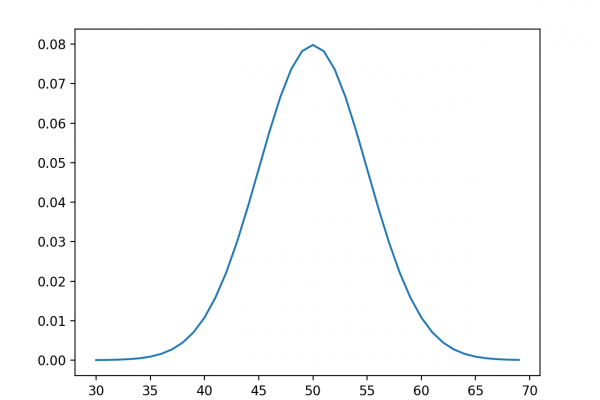

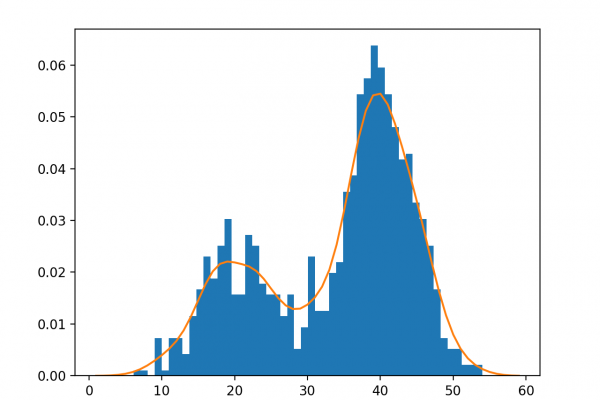

A Gentle Introduction to Probability Distributions

Last Updated on November 14, 2019

Probability can be used for more than calculating the likelihood of one event; it can summarize the likelihood of all possible outcomes. A quantity of interest in probability is called a random variable, and the relationship between each possible outcome of a random variable and its probability is called a probability distribution. Probability distributions are an important foundational concept in probability, and the names and shapes of common probability distributions will likely be familiar. The […]
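As a minimal sketch (an illustration, not code from the post), assume the random variable is the outcome of rolling one fair six-sided die. Its probability distribution is then a mapping from each possible outcome to its probability, and that single mapping answers questions about any event, not just one outcome. The names `die_distribution` and `probability` are hypothetical, chosen only for this example.

```python
from fractions import Fraction

# Hypothetical random variable X: the outcome of rolling one fair six-sided die.
# Its probability distribution maps every possible outcome to a probability.
die_distribution = {outcome: Fraction(1, 6) for outcome in range(1, 7)}


def probability(dist, event):
    """Return the probability that the outcome falls in `event` (a set of outcomes)."""
    return sum(p for outcome, p in dist.items() if outcome in event)


# A valid distribution assigns probabilities that sum to 1 over all outcomes.
total = sum(die_distribution.values())
print(total)  # 1

# The same distribution summarizes the likelihood of a multi-outcome event.
p_even = probability(die_distribution, {2, 4, 6})
print(p_even)  # 1/2
```

Using `Fraction` keeps the probabilities exact, so the sum-to-one check does not suffer from floating-point rounding.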
