How to Develop a Bidirectional LSTM For Sequence Classification in Python with Keras

Last Updated on August 27, 2020

Bidirectional LSTMs are an extension of traditional LSTMs that can improve model performance on sequence classification problems.

In problems where all timesteps of the input sequence are available, Bidirectional LSTMs train two LSTMs instead of one on the input sequence: the first on the input sequence as-is, and the second on a reversed copy of it. This can provide additional context to the network and result in faster and even fuller learning on the problem.
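To make the forward and reversed passes concrete, here is a minimal sketch in plain Python. It is not an LSTM: the hypothetical `run_recurrent()` function is a toy stand-in whose state is just a running sum, and `bidirectional()` is an illustrative helper rather than anything from Keras. It only shows how the second pass over a reversed copy gives each timestep access to what comes after it.

```python
def run_recurrent(sequence):
    """Toy stand-in for a recurrent layer: the state is a running sum."""
    state = 0.0
    outputs = []
    for value in sequence:
        state += value
        outputs.append(state)
    return outputs


def bidirectional(sequence):
    """Pair a forward pass with a pass over a reversed copy of the input."""
    forward = run_recurrent(sequence)
    # Run on the reversed copy, then flip the outputs so they line up by timestep.
    backward = run_recurrent(sequence[::-1])[::-1]
    return list(zip(forward, backward))


sequence = [1.0, 2.0, 3.0, 4.0]
print(bidirectional(sequence))
# [(1.0, 10.0), (3.0, 9.0), (6.0, 7.0), (10.0, 4.0)]
```

At each timestep, the first number summarizes the inputs seen so far and the second summarizes the inputs from that timestep to the end, which is the extra context the reversed pass contributes.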

In this tutorial, you will discover how to develop Bidirectional LSTMs for sequence classification in Python with the Keras deep learning library.

After completing this tutorial, you will know:

- How to develop a small contrived and configurable sequence classification problem.

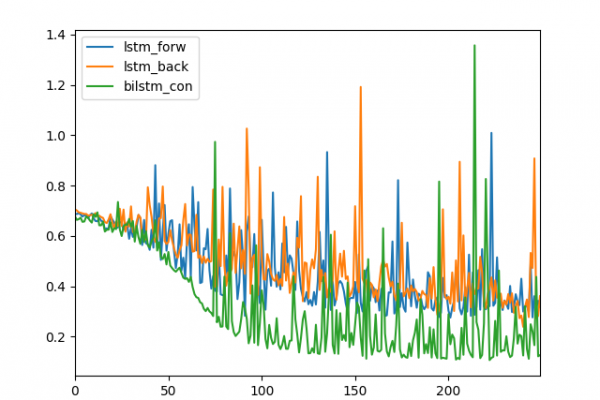

- How to develop an LSTM and Bidirectional LSTM for sequence classification.

- How to compare the performance of the merge mode used in Bidirectional LSTMs.
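As a rough preview of the first and last items, the sketch below uses plain Python only. The problem definition is an assumption for illustration: each timestep is labeled 1 once the cumulative sum of random inputs passes a threshold of one quarter of the sequence length. The helper names `get_sequence()` and `merge_outputs()` are hypothetical. The mode names `sum`, `mul`, `ave` and `concat` match the `merge_mode` options of the Keras `Bidirectional` wrapper, but the arithmetic here is applied to toy per-timestep scalars rather than to real LSTM outputs.

```python
import random


def get_sequence(n_timesteps, seed=None):
    """Assumed contrived problem: label is 1 once the cumulative sum passes a limit."""
    rng = random.Random(seed)
    values = [rng.random() for _ in range(n_timesteps)]
    limit = n_timesteps / 4.0  # configurable via the sequence length
    labels = []
    total = 0.0
    for value in values:
        total += value
        labels.append(1 if total > limit else 0)
    return values, labels


def merge_outputs(forward, backward, mode="concat"):
    """Combine per-timestep forward and backward outputs (toy scalars here)."""
    pairs = list(zip(forward, backward))
    if mode == "sum":
        return [f + b for f, b in pairs]
    if mode == "mul":
        return [f * b for f, b in pairs]
    if mode == "ave":
        return [(f + b) / 2.0 for f, b in pairs]
    if mode == "concat":
        return pairs
    raise ValueError("unknown merge mode: %s" % mode)


values, labels = get_sequence(10, seed=1)
print(labels)
for mode in ["sum", "mul", "ave", "concat"]:
    print(mode, merge_outputs([1.0, 3.0], [10.0, 9.0], mode))
```

Because the inputs are non-negative, the labels switch from 0 to 1 at most once, so a model has to learn where the running total crosses the limit.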

Kick-start your project with my new book Long Short-Term Memory Networks With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Update Jan/2020: Updated API for Keras 2.3 and TensorFlow 2.0.
